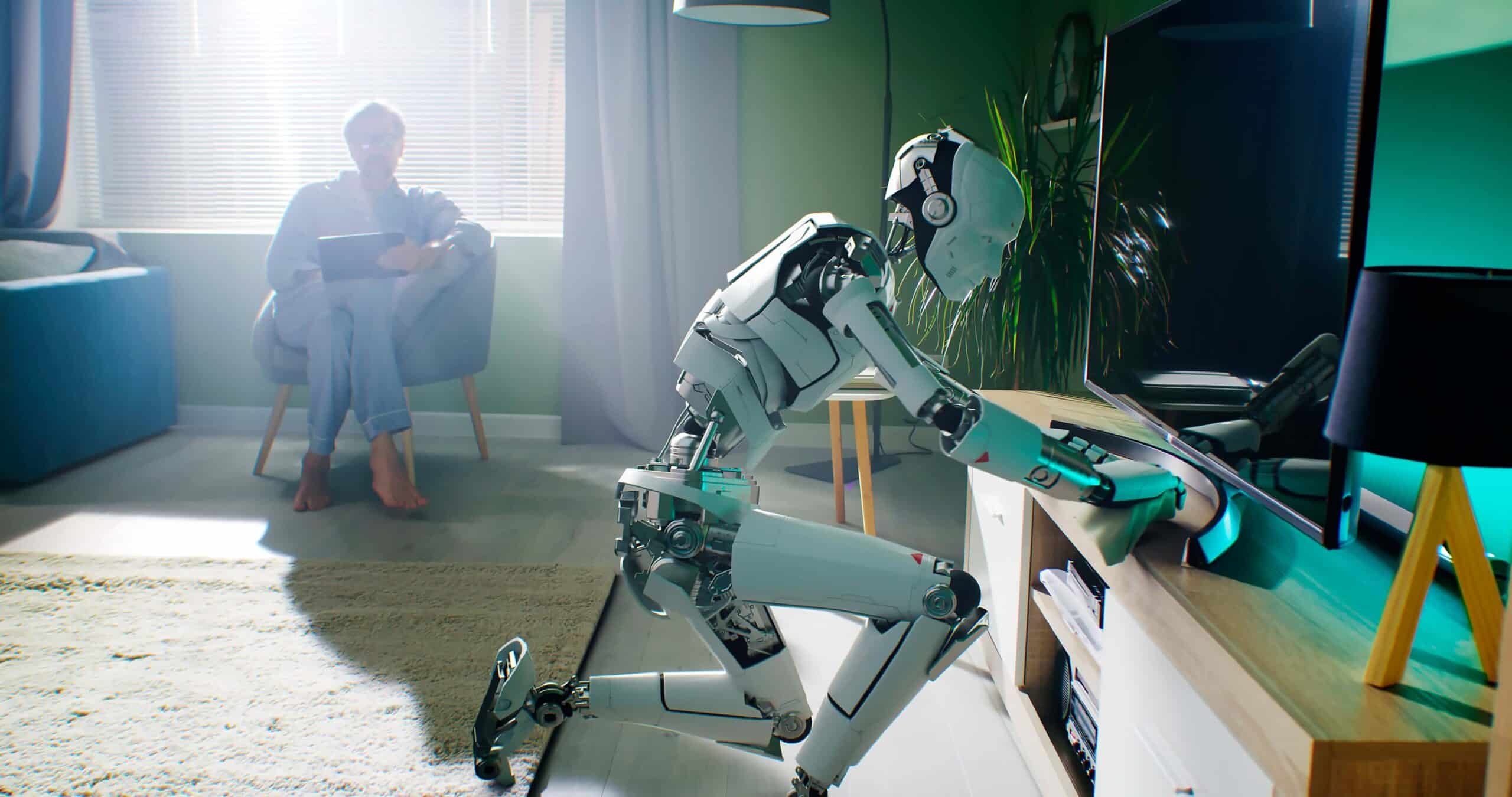

For centuries, humans have been captivated by the animal kingdom, yearning to understand the complex languages and behaviours of the creatures with whom we share this planet. From the haunting melodies of whale songs to the intricate dance of honeybees, the natural world is alive with communication that has long remained a mystery to us. But now, thanks to the rapid advancements in artificial intelligence (AI) technology, we may be on the verge of unlocking the secrets of animal communication. By training sophisticated algorithms on vast collections of animal sounds and behavioural data, researchers are developing systems capable of interpreting and even responding to the unique signals used by various species. “This is slowly opening our minds not only to the wonderful sounds that nonhumans make but to a fundamental set of questions about the so-called divide between humans and nonhumans, our relationship to other species. It’s also opening up new ways to think about conservation and our relationship to the planet. It’s pretty profound”, says Karen Bakker, a professor at the University of British Columbia.

This groundbreaking approach has the potential to significantly improve our understanding of animal cognition, social structures, and emotional states. It could enable us to discern when an animal is in distress or to gain insight into animals’ preferred habitats and social dynamics. Such knowledge could have profound implications for animal welfare and our overall relationship with the natural world. “Understanding what animals say is the first step to giving other species on the planet ‘a voice’ in conversations on our environment”, says Kay Firth-Butterfield, the World Economic Forum’s head of AI and machine learning. Moreover, the ability to communicate with animals could unlock a wealth of information about the ecosystems they inhabit, providing valuable data for environmental research and conservation initiatives. As we continue to grapple with the impacts of climate change and habitat loss, understanding the perspectives of the creatures most affected could be a game-changer.

Understanding the animal kingdom

Founded by Aza Raskin and Britt Selvitelle, the Earth Species Project is a nonprofit organisation that brings together a diverse team of AI scientists, biologists, and conservation experts to collect and analyse vast amounts of animal communication data and uncover the hidden meaning behind it. Imagine a future where nature documentaries no longer rely on human narration to explain the behaviour of animals on screen but instead feature subtitles that directly translate their vocalisations. This could give viewers an unprecedented level of insight into the complex social dynamics and emotional lives of the creatures they are watching. The implications of this research extend far beyond satisfying our curiosity about the animal kingdom, though. According to Raskin, being able to communicate with animals could fundamentally alter our perception of our place in the natural world. As we begin to recognise the richness and complexity of animal language, we may have to confront the fact that humans are not the only species capable of sophisticated communication and cognition. “These tools are going to change the way that we see ourselves in relation to everything”, he adds.

Of course, the path to achieving this level of understanding is not without obstacles. As Raskin and Selvitelle discovered during their early years of collaborating with biologists, one of the primary challenges in this field is the enormous amount of data collected by modern recording devices, which is nearly impossible to process manually. Machine learning algorithms, however, can process these massive datasets far more efficiently, allowing researchers to identify and categorise various animal vocalisations. The Earth Species Project’s early work tackled the so-called ‘cocktail party problem’, which has plagued animal communication research for years. When large groups of animals vocalise simultaneously, such as pods of dolphins in the open sea, it can be incredibly difficult to distinguish individual voices. But by training a neural network model on an extensive database of bottlenose dolphin signature whistles, the organisation developed a system that can separate overlapping animal voices, a breakthrough that could transform the study of animal communication in complex social settings.

More recently, the Earth Species Project has turned its attention to foundation models, the large, general-purpose AI models that also underpin today’s generative AI tools. Its first foundation model for animal communication has already proved successful at sorting beluga whale calls, and the organisation plans to extend it to a wide range of species, from orangutans to elephants to jumping spiders. The team is also working on a system that can generate realistic animal sounds and even engage in rudimentary conversations with creatures in their own languages. While this could be a step towards deciphering what animal calls truly mean, it may be a while before we actually get there. “I don’t believe that we will have an English-Dolphin translator, OK? Where you put English into your smartphone, and then it makes dolphin sounds, and the dolphin goes off and fetches you some sea urchin”, says Felix Effenberger, a senior AI research adviser for the Earth Species Project. “The goal is to first discover basic patterns of communication”.

Deciphering whale talk

Sperm whales are renowned for having the largest brains in the animal kingdom, as well as a complex social structure and sophisticated communication system. They typically congregate in family groups at the surface of the water, where they communicate with one another using distinctive sequences of clicks called codas. These codas, which resemble Morse code, have become the primary focus of research conducted by David Gruber and Shane Gero, scientists involved in the Cetacean Translation Initiative. Also known as Project Ceti, this collective of animal scientists and machine learning experts aims to use cutting-edge AI algorithms to decipher the intricate language of sperm whales, bridging the gap between human and animal understanding.
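
To make the Morse code comparison a little more concrete, here is a deliberately simplified sketch that treats a coda as nothing more than a list of click times and matches its rhythm against a few reference patterns. It is not Project Ceti’s method. It assumes, for illustration, that a coda can be summarised by the relative spacing of its clicks, and the template names and interval values are hypothetical rather than real sperm whale data.

```python
from math import dist

# Hypothetical reference rhythms. Each entry lists the gaps between successive
# clicks, normalised so they sum to 1. Illustrative values only, not real data.
TEMPLATES = {
    "evenly spaced, 5 clicks": [0.25, 0.25, 0.25, 0.25],
    "long pause then burst, 5 clicks": [0.55, 0.15, 0.15, 0.15],
    "evenly spaced, 3 clicks": [0.5, 0.5],
}


def rhythm(click_times):
    """Turn absolute click times (in seconds) into normalised inter-click intervals."""
    gaps = [later - earlier for earlier, later in zip(click_times, click_times[1:])]
    if not gaps or any(gap <= 0 for gap in gaps):
        raise ValueError("need at least two clicks in strictly increasing time order")
    total = sum(gaps)
    return [gap / total for gap in gaps]


def classify_coda(click_times):
    """Return the closest template (Euclidean distance) with the same number of gaps."""
    pattern = rhythm(click_times)
    candidates = {name: t for name, t in TEMPLATES.items() if len(t) == len(pattern)}
    if not candidates:
        return None  # no reference rhythm with this many clicks
    return min(candidates, key=lambda name: dist(pattern, candidates[name]))


# A fast and a slow coda with the same rhythm map to the same template,
# because only the relative spacing of the clicks is compared.
print(classify_coda([0.0, 0.1, 0.2, 0.3, 0.4]))       # evenly spaced, 5 clicks
print(classify_coda([0.0, 0.2, 0.4, 0.6, 0.8]))       # evenly spaced, 5 clicks
print(classify_coda([0.0, 0.55, 0.70, 0.85, 1.00]))   # long pause then burst, 5 clicks
```

A real analysis would first have to detect the clicks in noisy recordings; the nearest-template step only hints at how a distinctive click pattern could be matched to a known type.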

One of the primary challenges faced by researchers in this field is the sheer volume of data required to train generative AI algorithms. Just as language models like GPT rely on billions of words to learn and generate human-like text, the development of AI systems that can understand and mimic sperm whale communication demands a massive influx of acoustic data. Despite decades of dedicated research, the scientific community has only scratched the surface of the vast repository of animal vocalisations, highlighting the need for innovative data collection methods. Traditionally, researchers have relied on hydrophones deployed from boats to capture snippets of sperm whale conversations. While this approach has produced valuable insights, it also has its limitations, as it provides only a narrow glimpse into the rich social lives of these magnificent animals. Much like studying human language through recordings captured in a limited set of locations, this method risks overlooking the true complexity and diversity of sperm whale communication. “If you only study English-speaking society and you’re only recording in a dentist’s office, you’re going to think the words root canal and cavity are critically important to English-speaking culture, right?” says Gero.

To overcome this problem, Project Ceti is installing a continuous recording setup off the coast of Dominica in the Caribbean. By strategically placing microphones on buoys, sending robotic fish and aerial drones to follow the whales, and attaching tags to monitor their movements, heartbeats, and vocalisations, the team aims to gather an unprecedented volume of data on sperm whale behaviour and communication. Project Ceti estimates that its new recording setup could gather a staggering 400,000 times more data in a single year than Gero has managed to collect over his entire career. This wealth of information could provide unparalleled insights into the social lives and cultural practices of sperm whales. Previous research has already revealed distinct dialects among sperm whale populations, with different clans identifying themselves through unique coda patterns. As Gero notes, this highlights the crucial role that social bonds, belonging, and group identity play in shaping animal communication. While the algorithms may not be able to translate codas into human language anytime soon, they will help researchers identify which calls are likely to be most meaningful to the whales, allowing them to focus their efforts on deciphering the most critical aspects of sperm whale language and social dynamics.

What does the dog say?

Dogs have long been celebrated as man’s best friend, offering unwavering loyalty, companionship, and unconditional love to their ‘hoomans’. However, despite the deep bond between humans and their canine companions, one significant barrier has persisted throughout the ages: the inability to fully understand each other’s language. While dogs have evolved to be highly attuned to human emotions and body language, the intricacies of their own communication system have largely remained a mystery to us. But that may soon change, thanks to the work of pioneering individuals like Con Slobodchikoff, an animal behaviourist and the CEO of Zoolingua, who has dedicated his career to studying the complexities of animal communication, particularly that of prairie dogs, which are burrowing rodents rather than canines.

“Over a series of experiments, we have found that prairie dogs have a very sophisticated system of communication that I’m comfortable calling language”, he says. “They have all of the features that linguists say you have to find in an animal communication system to call it language”. According to Slobodchikoff, a prairie dog’s alarm call can convey detailed information about approaching predators, including their species, size, shape, and speed, all with a single chirp. If the predator is a human, the alarm call can even describe the clothes they are wearing.

Building upon this research, Slobodchikoff is now focusing his efforts on developing translation technology that can interpret both the vocalisations and the body language of dogs and other domestic animals. “The idea is that we have a device that you can point to a dog, and the device analyses the dog’s body language and vocal signals and says, ‘I’m hungry, or please let me out, I need to pee, or you’re scaring me,’ or something along those lines”, he explains. This technology has the potential to revolutionise the way we interact with our canine companions, fostering a deeper understanding and connection between species and ultimately strengthening the bond that has earned dogs their title as man’s best friend.

The hidden meaning behind cats’ expressions

While some researchers are exploring the potential of AI to decipher the barks and body language of our canine friends, others are turning their attention to another beloved pet: cats. Cat owners have long grappled with the enigmatic nature of their feline companions, trying to decipher the subtle nuances of their meows, postures, and facial expressions. Recent studies have revealed that cats employ a vast array of expressions in their interactions with both humans and their own species. However, the expressions they use with humans are different from those they use with other cats, adding another layer of intricacy to the puzzle. This complexity in feline facial expressions has prompted researchers like Daniel Mills, a professor of veterinary behavioural medicine at the University of Lincoln, to explore how AI can help us better understand what our cats are ‘saying’. “We could use AI to teach us a lot about what animals are trying to tell us”, says Mills.

Essentially, there are two ways to approach this challenge. The first involves training AI to recognise specific features, such as the position of a cat’s ears, which have long been associated with particular emotional states. This approach builds directly on established knowledge of feline body language. The second, more modern approach is to let AI devise its own classification rules, which may unearth previously unrecognised patterns and connections and reveal the most reliable ways to distinguish between different emotional states.

The potential applications of this technology extend far beyond our homes. AI could serve as a powerful tool for monitoring the wellbeing of many other animals, such as cows during their daily milking routine. By analysing facial expressions for signs of pain or distress, AI could give farmers a real-time assessment of their animals’ health, enabling timely interventions and improving overall welfare.
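
The contrast between the two approaches to reading cat expressions can be sketched in a few lines. This is an illustration under stated assumptions, not the method used by Mills or any other research group: the three facial features, the 0.5 threshold, the ‘relaxed’ and ‘uneasy’ labels, and the training examples are all hypothetical. The learned approach is represented here by a very simple nearest-centroid classifier; a real system could instead learn from the images themselves rather than from three hand-picked numbers.

```python
from math import dist
from statistics import mean

# Hypothetical features, each scaled to 0..1:
#   ear_flatness  (0 = ears upright, 1 = flattened back)
#   eye_narrowing (0 = wide open, 1 = nearly closed)
#   whisker_push  (0 = relaxed, 1 = pulled forward)
# The labels "relaxed" and "uneasy" are illustrative, not a validated scale.


# Approach 1: a hand-written rule built on one well-established marker.
def rule_based(ear_flatness, threshold=0.5):
    return "uneasy" if ear_flatness > threshold else "relaxed"


# Approach 2: derive the decision rule from labelled examples instead.
# Here that is a simple nearest-centroid classifier over all three features.
def fit_centroids(examples):
    """examples: list of (feature_vector, label) pairs -> {label: mean vector}"""
    grouped = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    return {
        label: [mean(column) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Pick the label whose mean feature vector is closest to this example."""
    return min(centroids, key=lambda label: dist(features, centroids[label]))


# Made-up training examples, purely for illustration.
training = [
    ([0.1, 0.2, 0.1], "relaxed"),
    ([0.2, 0.1, 0.2], "relaxed"),
    ([0.8, 0.7, 0.9], "uneasy"),
    ([0.9, 0.8, 0.7], "uneasy"),
]
centroids = fit_centroids(training)

# A cat with only slightly flattened ears but other tense signals: the rule
# looks at the ears alone, while the learned classifier uses all three features.
example = [0.45, 0.8, 0.9]
print(rule_based(example[0]))       # relaxed
print(predict(centroids, example))  # uneasy
```

The rule is easy to explain but only sees what its designer chose to look at; the learned classifier can pick up combinations of cues, at the cost of depending entirely on how well its training examples were labelled.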

Limitations and ethical concerns

While the ability to communicate with animals offers some very exciting possibilities, it also raises questions about the unintended consequences of such achievements. There is no doubt that the scientists involved in organisations like the Earth Species Project are genuinely passionate about the animal kingdom, but others may be driven by less noble motives. Karen Bakker warns that the technology could be exploited by commercial industries and poachers to locate animals more effectively or to lure them closer by playing recordings of their own calls back to them. This could have devastating consequences for already vulnerable animal populations. It is, therefore, imperative to introduce best-practice guidelines and strict legislative frameworks to prevent such misuse. As Raskin points out: “It’s not enough to make the technology. Every time you invent a technology, you also invent a responsibility”. Resolving these ethical challenges will require careful consideration and debate as this nascent field continues to evolve. But one thing is clear: the actions we take now will have far-reaching implications for the future of human-animal relations. “The last thing any of us want to do is be in a scenario where we look back and say, like Einstein did, ‘If I had known better I would have never helped with the bomb’”, adds Gero.

It is also worth noting that the interpretation of animal communication is not an exact science. As much as we may wish to believe otherwise, human perception is limited, and we may never fully grasp the nuances and complexities of the ways in which animals communicate. Many AI models still rely on human-labelled training data, and even the patterns a model discovers on its own must ultimately be interpreted by people, which means that our own biases and misunderstandings can inadvertently shape the way we interpret animal behaviour. “I’ll write that the bats are fighting over food, but maybe that’s not true”, explains zoology professor Yossi Yovel. “Maybe they’re fighting over something that I have no clue about because I am a human. Maybe they have just seen the magnetic field of the earth, which some animals can”. Does this mean that we will never be able to have a real back-and-forth conversation with animals? Bakker is sceptical, at least for the foreseeable future. “We may be able to do simple things, like issue better alarm calls or better interpret the sounds of other species”, she says. “But I don’t think we’re going to have a zoological version of Google Translate available in the next decade”.

Closing thoughts

Despite these challenges, the potential benefits of AI-powered animal communication are too great to ignore. By listening more closely to the voices of the creatures with whom we share this planet, we have the opportunity to foster a deeper sense of empathy and understanding. We may never be able to ask a whale about its day or a cat about its dreams, but by opening our minds and hearts to the possibility of communication, we can begin to build bridges across the species divide. In the end, the success of AI-powered animal communication will not be measured by the sophistication of our technology or the accuracy of our translations. Rather, it will be judged by the depth of our compassion and the strength of our resolve to create a world where all creatures are valued and respected. It is a daunting task, to be sure, but one that we cannot afford to shirk.