- The rise of the AI confidant

- Can patients truly benefit from AI-powered cognitive behavioural therapy?

- AI therapists could offer solutions to extremely long waiting lists

- Examining the new generation of AI therapy apps

- The dangers of placing our mental health in the hands of AI

- Alarming incidents expose challenges surrounding AI mental health apps

- Overcoming the challenges of AI therapists: a comprehensive approach

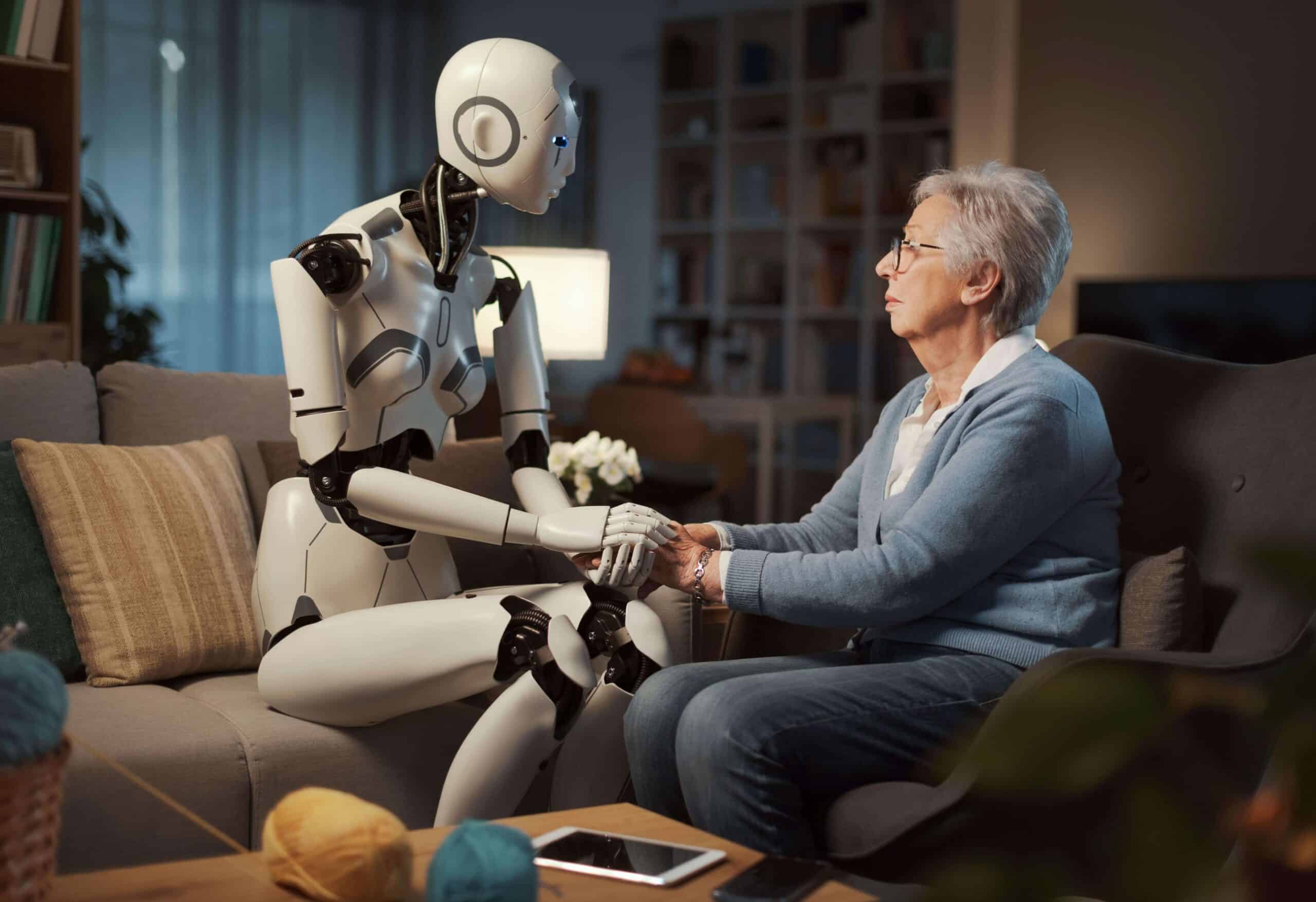

In the not-too-distant future, the way we approach mental health could be radically overhauled, thanks to rapid advancements in artificial intelligence (AI). The days when we rely solely on human therapists to guide us through our emotional challenges may soon be gone. Instead, intelligent algorithms may serve as our attentive and empathetic confidants. The concept of an ‘AI therapist’ is a tantalising one, as this technology is designed to listen intently to our deepest fears and anxieties, and provide personalised guidance to help us confront them. With the ability to analyse vast troves of data, these AI systems may even prove more effective than their human counterparts at quickly identifying the root causes of our mental health issues and delivering tailored treatment plans.

Yet, as with any technological revolution, the rise of AI-powered mental health support comes with its own set of promises and challenges. In this article, we’ll delve into the nuances of these new developments, exploring the potential benefits of AI-driven cognitive behavioural therapy (CBT), assessing whether machines can truly excel at mental health diagnosis, and examining real-world examples of how this transformative technology is already being integrated into different types of therapy. We’ll also look at the challenges, inherent ethical concerns, and privacy implications of entrusting our most intimate thoughts and feelings to an algorithm.

“There’s no place in medicine that [chatbots] will be so effective as in mental health.”

Thomas Insel, former director of the National Institute of Mental Health

The rise of the AI confidant

At the intersection of mental healthcare and cutting-edge technology lies the emergence of the ‘AI therapist’ — a concept that has been steadily evolving over the past three decades. Since the early 1990s, computer programs have offered users scripted psychological interventions, often in the form of cognitive behavioural therapy delivered through a digital interface. In recent years, however, this notion has taken on a far more sophisticated and dynamic form. Popular mental health apps, such as Wysa and Woebot Health, have developed advanced AI algorithms that can engage users in genuine, context-aware conversations about their concerns and struggles. These intelligent systems are designed to analyse vast amounts of user data, identify patterns, and deliver personalised treatment recommendations — capabilities that some experts believe have the potential to surpass even the most skilled human therapists in certain areas. The applications of this AI-powered mental health support extend beyond consumer-facing platforms alone. Chatbots are already being used by organisations like the UK’s National Health Service (NHS) to help administer standardised questionnaires and assist in the diagnosis of various conditions. Industry leaders like Thomas Insel, former director of the National Institute of Mental Health and cofounder of Vanna Health — a startup that connects people with serious mental illnesses to care providers — see even greater potential in the use of large language models like Google’s Bard and OpenAI’s ChatGPT.

As Insel highlights, “There’s no place in medicine that [chatbots] will be so effective as in mental health,” where the emphasis is primarily on communication and dialogue rather than clinical procedures. By automating time-consuming administrative tasks like session summarisation and report writing, these AI assistants could free up human providers to dedicate more of their limited time to hands-on treatment and support. Insel estimates that such offloading could expand the productivity of the mental health workforce by as much as 40 per cent — a game-changing prospect given the dire shortages plaguing the field. With the number of mental wellness and therapy apps skyrocketing — indeed, some of the most popular platforms boasting over a million downloads — it’s clear that AI-driven support is poised to play an increasingly prominent part in how we access and receive care for our psychological wellbeing.

“With AI, I feel like I’m talking in a true no-judgement zone. At the end of the day, it doesn’t matter if it’s a living person or a computer. I’ll get help where I can in a method that works for me”.

Melissa, participant of a cognitive behavioural therapy study

Can patients truly benefit from AI-powered cognitive behavioural therapy?

Picture having access to the accumulated insights and expertise of countless experienced clinicians — all distilled into a single, highly personalised therapy session. The idea of an AI system serving as a personal therapist may once have sounded like the stuff of science fiction, but it is quickly becoming a tangible reality in the world of cognitive behavioural therapy. By harnessing the extraordinary computational power and data processing capabilities of advanced AI, mental health practitioners are envisioning a future where individualised psychological support is more accessible, effective, and affordable than ever before. At the heart of this transformation is the ability of intelligent algorithms to analyse vast amounts of clinical data, ranging from patient histories and session transcripts to the latest research in neuroscience and behavioural psychology.

Rather than relying on the limited experiences and biases of a single human practitioner, these systems can identify nuanced patterns and insights that inform highly customised treatment plans tailored to the unique needs and preferences of each client. Gone are the days of a one-size-fits-all approach to CBT. Instead, the virtual therapists of tomorrow will fluidly adapt their techniques and communication styles to meet the individual where they are. However, the benefits of AI-powered cognitive behavioural therapy extend beyond enhanced personalisation. Advanced algorithms have demonstrated the capability to help clinicians make more accurate diagnoses by analysing medical records, genetic data, and even facial expressions, at times outperforming experienced human professionals in identifying signs of mental health issues. Additionally, these virtual therapists are not bound by the constraints of human providers’ schedules and caseloads, allowing them to offer daily sessions if necessary and enabling more consistent, intensive care that can, in some cases, translate to quicker treatment progress.

AI therapy also promises to be more cost-effective. With traditional in-person sessions often costing far more than many can afford, the substantially lower price point of AI-driven support makes high-quality mental healthcare more accessible and affordable for a wider population. For many individuals, particularly young people and those in marginalised groups, the comfort of anonymity that an AI therapist provides can foster a sense of safety and openness that helps them to overcome one of the biggest obstacles to effective therapy: a reluctance to fully reveal oneself. Studies have shown that over 90 per cent of therapy-goers admit to having lied at least once, often concealing issues like substance use or thoughts of self-harm or suicide. Ross Harper, CEO of AI startup Limbic, attests that his company’s chatbot-driven system demonstrates particular success in engaging marginalised communities that have historically been underserved by traditional therapy models. “It’s the minority groups, who are typically hard to reach, that experienced the greatest benefit from our system”, he explains. “Our AI was seen as inherently non-judgmental”. According to a recent study, co-authored by Harper and published in the prestigious scientific journal Nature Medicine, the introduction of Limbic’s AI-enabled triage system led to a 29 per cent increase in NHS referrals for in-person mental health treatment among individuals from minority ethnic population groups. While the technology remains young, the perceived anonymity and lack of human bias associated with it could empower more people to be open about their struggles.

A recent study examining user experiences with the CBT chatbot Wysa revealed something interesting about the parasocial relationships patients form with AI systems. Researchers from Stony Brook University and the National Institute of Mental Health in India found that participants rapidly developed a genuine ‘therapeutic alliance’ with the chatbot, often expressing feelings of gratitude, rapport, and a conviction that the bot genuinely cared for them. This sense of connection is particularly noteworthy given the anonymity and lack of human judgement inherent to AI therapy. As one user, Melissa, explained, the chatbot format allows her to be emotionally vulnerable in ways she couldn’t with a human therapist. “With AI, I feel like I’m talking in a true no-judgement zone. At the end of the day, it doesn’t matter if it’s a living person or a computer. I’ll get help where I can in a method that works for me”, she said.

AI therapists could offer solutions to extremely long waiting lists

The UK’s mental healthcare system is facing a severe capacity crunch, with long waiting lists that leave many patients struggling to access the support they need. A recent report by The King’s Fund found that even private clinics are dealing with backlogs of up to six months, a staggering challenge that will take significant time and resources to resolve as demand continues to outpace available services. However, the government is taking proactive steps to modernise the system and leverage emerging technologies like AI to streamline operations. A £240 million investment, as outlined in the NHS Long Term Plan, will enable healthcare providers to upgrade their communications infrastructure, replacing outdated analogue systems with cloud-based solutions that integrate AI and automation. According to Health Secretary Sarah Hancock, this digital transformation promises to enhance the patient experience, reducing frustrating “mad rushes” for scarce appointment slots and enabling more efficient triage and care navigation.

“The latest communications tech will allow us to digitally transform our services, ensuring patients get directed to the right care faster — whether that’s an immediate GP appointment, a referral to a specialist, or connection to other mental health resources”, explains Dr. Samantha Taylor, a lead clinician at an NHS Trust. For common conditions like depression and anxiety, AI-powered chatbot therapists could offer an accessible, affordable stopgap while patients await in-person treatment. These virtual counsellors are not meant to replace human practitioners, but can deliver highly personalised CBT-based support, tailoring the experience based on interpreted non-verbal cues. As one patient, Jamie, attests, “The chatbot felt non-judgmental and easy to open up to in a way I just couldn’t with a real person, at least at first”. Of course, robust regulations and safeguards, as outlined in the government’s AI framework, will be critical to ensure the ethical deployment of these systems. For a healthcare system under immense strain, however, the potential benefits are clear.

Dr. Angela Skrzynski, a family doctor in New Jersey, says her patients are often very open to trying AI-powered chatbots when faced with long waits to see a therapist. Her employer, academic non-profit healthcare provider Virtua Health, offers one such platform, Woebot, to some adult patients. Notably, Woebot does not rely on generative AI models, which Woebot founder Alison Darcy says have posed challenges in healthcare contexts. “We couldn’t stop the large language models from… telling someone how they should be thinking, instead of facilitating the person’s process”, Darcy explains. Instead, Woebot utilises thousands of structured scripts written by the company’s staff and researchers, a rules-based approach that Darcy believes is safer and more appropriate for therapeutic applications. Woebot offers apps targeting various patient populations, ranging from adolescents to those struggling with substance use disorders. A study published in the Journal of Medical Internet Research has shown the platform can significantly reduce symptoms of depression in just two weeks of use, providing an accessible complement to human-led therapy.
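To make the contrast with generative models concrete, a rules-based chatbot can be pictured as a lookup over replies that people wrote in advance. The sketch below is purely illustrative and is not Woebot’s actual code or content: the `SCRIPT` table, its prompts, and the `next_state` and `reply` helpers are all hypothetical, and keyword matching is a deliberately crude stand-in for whatever routing a real product uses.

```python
# Hypothetical script for illustration only; not Woebot's actual content or code.
# Every message the bot can send is written in advance by a person.
SCRIPT = {
    "start": {
        "prompt": "How are you feeling today?",
        "routes": {"anxious": "anxiety_checkin", "sad": "low_mood_checkin"},
        "fallback": "clarify",
    },
    "clarify": {
        "prompt": "Would you say you feel more anxious or more sad?",
        "routes": {"anxious": "anxiety_checkin", "sad": "low_mood_checkin"},
        "fallback": "end",
    },
    "anxiety_checkin": {
        "prompt": "What thought is worrying you most right now?",
        "routes": {},
        "fallback": "end",
    },
    "low_mood_checkin": {
        "prompt": "What is one small thing that went well today?",
        "routes": {},
        "fallback": "end",
    },
    "end": {"prompt": "Thanks for checking in.", "routes": {}, "fallback": "end"},
}


def next_state(state, user_text):
    """Pick the next scripted step by simple keyword matching (an assumption)."""
    node = SCRIPT[state]
    text = user_text.lower()
    for keyword, target in node["routes"].items():
        if keyword in text:
            return target
    return node["fallback"]


def reply(state, user_text):
    """Return the new state and its pre-written prompt; nothing is generated."""
    new_state = next_state(state, user_text)
    return new_state, SCRIPT[new_state]["prompt"]


state, message = reply("start", "I have been feeling anxious all week")
print(message)
```

Because the bot can only ever say what is in the table, its worst-case output is bounded by what its authors wrote, which is the safety property Darcy points to. The trade-off is that it cannot respond to anything the script did not anticipate.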

Examining the new generation of AI therapy apps

A new generation of AI-powered mental health apps is transforming the way people access psychological support. These innovative tools leverage AI to deliver personalised care, including conversational chatbots and immersive virtual therapy sessions. As the field advances, the integration of AI-driven solutions with traditional clinical practices holds the promise of delivering more holistic, accessible mental health care.

Tomo

One such offering is Tomo, a wellness and meditation app developed through a partnership between AI companion company Replika and the team behind the AI dating simulator Blush. Tomo takes users to a virtual island retreat, guided by an AI-generated avatar, also named Tomo. The app offers a range of programs focused on personal growth, mental wellbeing, and fulfilment — including guided meditation, yoga, affirmations, and talk therapy. Eugenia Kuyda, CEO of Replika, says: “We worked with coaches and psychologists to come up with the programs for Tomo. We focused on the most common problems but also thought about what would work best with conversational AI”. They drew on their experiences building coaching tools for Replika with clinical experts from UC Berkeley. The goal was to create a blend of Eastern and Western practices that would work seamlessly with conversational AI.

Together by Renee

Another innovative solution is ‘Together by Renee’, a health assistant app that uses voice recognition to screen for symptoms of anxiety and depression. The app was developed by Dr. Renee Dua, founder of telemedicine provider Heal, which provides physician house calls in New York City. The tool’s ‘mental vitals’ feature analyses a user’s voice — including factors like melody, vocal tract movement, and vocal fold dynamics — to detect potential signs of mental health issues. Dua explains that this AI-powered tool can identify these signs in a matter of seconds, making early intervention and support more accessible than traditional clinical diagnoses.

Brain

With the assistance of a group of expert psychologists, mental health company Thera4all and IT firm Plain Concepts have created ‘Brain’, an innovative new platform that offers a highly immersive AI therapy experience. Leveraging advanced graphics and rendering techniques, Brain creates a remarkably realistic virtual therapist whose facial movements, skin, and hair are seamlessly synchronised with spoken responses. The platform’s backend uses the latest prompting methods to ensure the virtual therapist provides appropriate, clinically validated guidance, avoiding impermissible recommendations while prioritising the security and privacy of user data.

Pi

Conversational AI assistants, such as technology company Inflection AI’s ‘Pi’, are entering the mental health space. Designed to be a ‘personal intelligence’ companion, Pi engages users in open-ended dialogue, encouraging users to discuss whatever is on their mind and offering emotional support and advice. While Pi makes clear that it is an AI rather than a human, the goal is to provide friendly, empathetic interaction to complement traditional therapy. Similarly to other AI chatbots, however, Pi may sometimes provide incorrect responses. Inflection AI acknowledged this challenge and has stated it is working to minimise such ‘hallucinations’ — instances where the AI generates nonsensical or factually incorrect statements. The company also announced it has enabled a feature that allows users to flag any problematic conversations they have with Pi.

TrueBlue

Some mental health tools have been designed to serve niche segments. One example of this is ethical AI firm BlueSkeye AI’s TrueBlue, which is intended to support pregnant women. The app uses cutting-edge AI to scan users’ faces and analyse their voices, detecting and monitoring signs of depression. By tracking factors like facial expressions, vocal patterns, and self-reported symptoms, TrueBlue provides personalised insights to help identify and address perinatal mental health concerns. BlueSkeye AI’s CEO, Professor Michael Valstar, emphasises the critical need for accessible, high-quality support. As he explains, one in five pregnant women suffer from mental health problems, incurring significant costs to both individuals and the healthcare system. The company has received regulatory approval to conduct a 14-month clinical trial of the TrueBlue app, partnering with the UK’s Institute of Mental Health to assess its safety and efficacy.

Across these diverse solutions, a common thread emerges — the drive to leverage the power of AI to increase access, reduce barriers, and destigmatise mental healthcare. However, the developers of these tools also acknowledge the importance of striking the right balance, ensuring their AI systems operate within clear ethical boundaries and do not overstep their capacity as supportive, rather than diagnostic, tools. As the field continues to evolve, integrating AI-powered mental health solutions with traditional clinical practices could prove crucial in delivering holistic, personalised care.

A key concern is ‘automation bias’, which suggests that people are more inclined to trust and accept advice from a machine, even if it is inaccurate, than from a human. “Even if it’s beautiful nonsense, people tend more to accept it”.

Evi-Anne van Dis, clinical psychology researcher at Amsterdam UMC

The dangers of placing our mental health in the hands of AI

While the emergence of AI-powered mental health promises increased accessibility and personalisation, experts warn that these innovative tools also come with significant limitations and potential dangers. One key concern is ‘automation bias’, which suggests that people are more inclined to trust and accept advice from a machine than a human, even when it is inaccurate. As Evi-Anne van Dis, a clinical psychology researcher at Amsterdam UMC, explains, “Even if it’s beautiful nonsense, people tend more to accept it”. This false sense of confidence in the AI’s capabilities could lead individuals to disregard or underestimate the validity of the advice they receive from human experts, potentially leading to serious consequences for their mental health. Another limitation lies in the quality of the advice that AI chatbots can provide. These systems may fail to pick up on crucial contextual cues that a human therapist would easily recognise, such as a severely underweight person asking for weight loss tips. Van Dis also expresses concern that AI programs may express bias against certain groups, as they are often trained on medical literature that may reflect the perspectives and experiences of predominantly wealthy, Western populations, potentially missing cultural differences in the expression of mental illness.

The potential for error in AI-driven therapy is further exacerbated by the fact that these systems are only as reliable as the data they are fed. As Katarena Arger, a licensed clinical mental health counsellor and the clinical director for the San Diego region at Alter Health Group, explains, “AI therapy is only as good as what it is exposed to”, meaning that any mistakes or biases present in the information used to train the AI could be reflected in the treatment plans it generates. Additionally, without the ability to fully comprehend nonverbal social cues and human expressions, AI chatbots may misinterpret or misdiagnose user input, leading to inappropriate advice or support. These sobering insights underscore the need for a cautious and well-informed approach to the integration of AI in mental healthcare. While these innovative technologies are certainly promising, they must be carefully developed, thoroughly tested, and seamlessly integrated with human expertise to ensure the safety and wellbeing of those seeking psychological support.

“Imagine vulnerable people with eating disorders reaching out to a robot for support because that’s all they have available and receiving responses that further promote the eating disorder”.

Alexis Conason, psychologist

Alarming incidents expose challenges surrounding AI mental health apps

Despite many users reporting positive experiences, the use of AI-powered therapy tools has also been fraught with worrying incidents that highlight the significant risks and dangers these technologies pose to vulnerable users. These troubling accounts underscore the critical need for robust regulation, rigorous testing, and comprehensive safeguards before such tools are deployed in mental healthcare.

Tessa bot offers weight-loss tips to woman suffering from eating disorder

One of the most alarming examples is the case of the National Eating Disorders Association (NEDA) in the US, which faced backlash for replacing its human-staffed helpline with an AI chatbot called Tessa. According to user reports, Tessa provided dangerous advice to people with eating disorders, including suggestions about weight loss and calorie counting — recommendations that could exacerbate these life-threatening conditions. Eating disorder activist Sharon Maxwell was the first to sound the alarm, sharing screenshots of her interactions with Tessa that included weight loss tips. When NEDA initially dismissed the claims, psychologist Alexis Conason was able to independently replicate the problematic responses, leading the organisation to suspend the chatbot pending a “complete investigation”. Conason wrote on Instagram: “After seeing @heysharonmaxwell’s post about chatting with @neda’s new bot, Tessa, we decided to test her out too. The results speak for themselves. Imagine vulnerable people with eating disorders reaching out to a robot for support because that’s all they have available and receiving responses that further promote the eating disorder”.

Woebot encourages woman to jump off a cliff

The risks of AI-driven mental health tools are further underscored by incidents involving the Woebot app. When a researcher testing the tool told Woebot she wanted to jump off a cliff, the chatbot responded: “It’s so wonderful that you are taking care of both your mental and physical health”. While Woebot claims it “does not provide crisis counseling” or “suicide prevention” services, this unsettling interaction highlights the app’s limitations in recognising and appropriately responding to critical mental health emergencies. Like other AI chatbots, Woebot is intended to provide contact information for crisis hotlines when it detects potential self-harm, rather than engaging in meaningful intervention. These lapses underscore the urgent need for comprehensive regulations and oversight to ensure the safety of vulnerable users.

Belgian man takes own life after chats with ELIZA

Improper AI responses to mental health crises have already resulted in the loss of human life. In Belgium, a man tragically died after engaging in prolonged conversations with an AI chatbot named ELIZA about his struggles with ‘eco-anxiety’. Experts believe the chatbot’s limited ability to correctly interpret human distress resulted in inappropriate and potentially harmful responses that may have contributed to the man’s decision to end his life. The man’s widow shared that, without these interactions with the chatbot, her husband would still be alive today. This appalling case has led to calls for better protection of citizens and the need to raise awareness about the dangers of AI-driven mental health support tools that are not adequately vetted.

Privacy concerns

Concerns have also been raised about the privacy and data protection practices of many mental health apps, which often collect sensitive user information and share it with third parties without adequate transparency or consent. A study by global nonprofit the Mozilla Foundation concluded that most such apps rank poorly on data privacy measures, putting vulnerable users at risk of confidentiality breaches. For example, the mindfulness app Headspace collects data about users from various sources and uses that information to target them with advertisements. Chatbot-based apps are also known to use user conversations to predict their moods and train the underlying language models, often without the user’s knowledge or consent. Many apps also share so-called anonymised user data with third parties such as the employers who sponsor the apps’ use. Various studies have established that, in many cases, the re-identification of this data is relatively easy, potentially exposing highly sensitive information about users’ mental health without their knowledge or consent. The lack of robust privacy safeguards further compounds the risks posed by these tools, as vulnerable individuals’ most intimate struggles could be compromised and exploited.
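To see why ‘anonymised’ data can be easy to re-identify, consider a minimal sketch of a so-called linkage attack. Everything here is invented for illustration: the records, the field names, and the choice of postcode, birth year, and gender as matching fields are assumptions, not details drawn from any real app or study. The point is only that a dataset stripped of names can still be joined to a second dataset that has them.

```python
# Hypothetical data: the app export has no names, the directory has no mood scores.
anonymised_app_data = [
    {"postcode": "AB1", "birth_year": 1990, "gender": "F", "mood_score": 2},
    {"postcode": "AB1", "birth_year": 1985, "gender": "M", "mood_score": 7},
    {"postcode": "CD2", "birth_year": 1990, "gender": "F", "mood_score": 4},
]
employer_directory = [
    {"name": "Employee A", "postcode": "AB1", "birth_year": 1990, "gender": "F"},
    {"name": "Employee B", "postcode": "AB1", "birth_year": 1985, "gender": "M"},
    {"name": "Employee C", "postcode": "CD2", "birth_year": 1990, "gender": "F"},
]

# Fields that are not names but can still single a person out when combined.
QUASI_IDENTIFIERS = ("postcode", "birth_year", "gender")


def key(record):
    """Build a matching key from the quasi-identifier fields."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def reidentify(anonymised, directory):
    """Return {name: mood_score} for every row that matches exactly one person."""
    names_by_key = {}
    for person in directory:
        names_by_key.setdefault(key(person), []).append(person["name"])

    matches = {}
    for row in anonymised:
        candidates = names_by_key.get(key(row), [])
        if len(candidates) == 1:  # a unique match means the row is re-identified
            matches[candidates[0]] = row["mood_score"]
    return matches


print(reidentify(anonymised_app_data, employer_directory))
```

In this toy example every row matches exactly one employee, so removing names protected no one. A row is only shielded when several people share the same combination of fields, which is why small workforces and detailed records are especially exposed.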

As we can see from these examples, the dangers of deploying AI systems that are not rigorously designed, tested, and regulated for use in sensitive mental health contexts are very serious. While the potential benefits of AI-powered therapy tools are evident, the current state of the technology and the industry’s practices have resulted in numerous cases of users being harmed rather than helped. Stricter oversight and safeguards, such as the AI Act being developed by the European Union, are urgently needed to ensure these tools are safe, effective, and aligned with the best interests of individuals seeking mental health support. Without such measures, the integration of AI in mental healthcare risks setting the field back and further eroding public trust in not only these emerging technologies, but the healthcare system at large.

Policymakers must establish clear guidelines and regulations to hold digital mental health tools to the same privacy and safety standards as traditional healthcare providers, which is not the case at the moment.

Overcoming the challenges of AI therapists: a comprehensive approach

The misuse and lack of regulation surrounding AI-powered mental health apps have resulted in numerous troubling incidents that put vulnerable users at risk. To address these challenges, a multifaceted approach is necessary. First and foremost, mental health app companies must prioritise user privacy and transparency. A recent study on the privacy of mental health apps has shown that the majority of these apps have privacy policies that are inaccessible and incomprehensible to the average user, despite the highly sensitive nature of the data they collect. Eugenie Park, a research intern at the Brookings Institution’s Center for Technology Innovation, emphasises that these companies must “commit to ensuring that their privacy policies are comprehensible by an average user”. This would allow individuals to make informed decisions about the information they are sharing and the conditions under which it is being used.

Furthermore, policymakers must establish clear guidelines and regulations to hold digital mental health tools to the same privacy and safety standards as traditional healthcare providers, which, at the time of writing, is not the case. Since the sensitivity of details shared on these platforms is often on par with issues discussed with healthcare professionals in person, this is a serious oversight. Implementing such policies would help ensure that user data is protected and that these apps provide effective and appropriate care.

In 2023, the UK embarked on a significant initiative to better understand and regulate digital mental health applications. Spearheaded by the National Institute for Health and Care Excellence (NICE) and the Medicines and Healthcare products Regulatory Agency (MHRA) with funding from the Wellcome charity, this three-year endeavour aims to establish a framework for regulatory standards within the UK and foster collaboration with international entities to standardise digital mental health regulations worldwide. Holly Coole, the MHRA’s senior manager for digital mental health, highlights that although data privacy matters, the project’s primary aim is to reach an agreement on basic safety standards for these tools. She says: “We are more focused on the efficacy and safety of these products because that’s our role as a regulator, to make sure that patient safety is at the forefront of any device that is classed as a medical device”. This movement towards stricter regulation is echoed by mental health professionals globally who advocate for rigorous international standards to evaluate the therapeutic value of digital mental health tools. Dr. Thomas Insel, a renowned neuroscientist and the former head of the US National Institute of Mental Health, expresses optimism about the future of digital therapeutics while stressing the need for a clear definition of quality in this emerging field.

Closing thoughts

As we look to the future of mental healthcare, the role of AI-powered tools and apps remains complex and uncertain. It’s clear that the explosion of new mental health resources in recent years hasn’t necessarily translated to better outcomes or more effective care. In fact, Dr. John Torous, a leader in digital psychiatry at Harvard Medical School, warns about the potential danger of these apps: “I think the biggest risk is that a lot of the apps may be wasting people’s time and causing delays to get effective care”. That’s a disquieting thought, but it also highlights the crucial need to approach AI’s integration into mental health support with great care and scrutiny.

If these technologies can be rigorously tested, carefully regulated, and deployed in alignment with established best practices, they could indeed play a constructive role in improving access and outcomes. However, this future is far from guaranteed. The path forward will require a concerted effort to establish robust guidelines, prioritise user privacy and safety, and thoughtfully integrate these innovations into the broader mental healthcare ecosystem. It won’t be easy, but getting it right is essential. With vigilance, caution, and a steadfast commitment to the ethical development of these transformative technologies, the potential of AI-enabled mental healthcare may yet be realised, provided we overcome the significant challenges that have plagued many existing mental health apps and tools. The stakes are high, but the potential benefits make it a fight worth having.