- Don’t say we didn’t warn you

- The singularity – what is it again?

- Why is this terrifying?

- What will AI bring us, anyway?

- No need for ‘mission abort,’ but we do need to be very cautious

Towards the end of July, Facebook chatbots were shut down because they had somehow managed to autonomously develop their own unique language – undecipherable by humans. This paints a pretty disturbing picture of our potential future. The artificially intelligent engines had to be stopped when researchers at the Facebook AI Research Lab discovered that things had gotten out of hand and the bots had deviated from their script. In fact, they had stopped using English and continued in a language they had created themselves. During experiments the previous month, researchers mentioned that the bots displayed incredibly crafty negotiating skills, “feigning interest in one item in order to ‘sacrifice’ it at a later stage in the negotiation as a faux compromise.” These are incredible, and terrifying, developments.

Don’t say we didn’t warn you

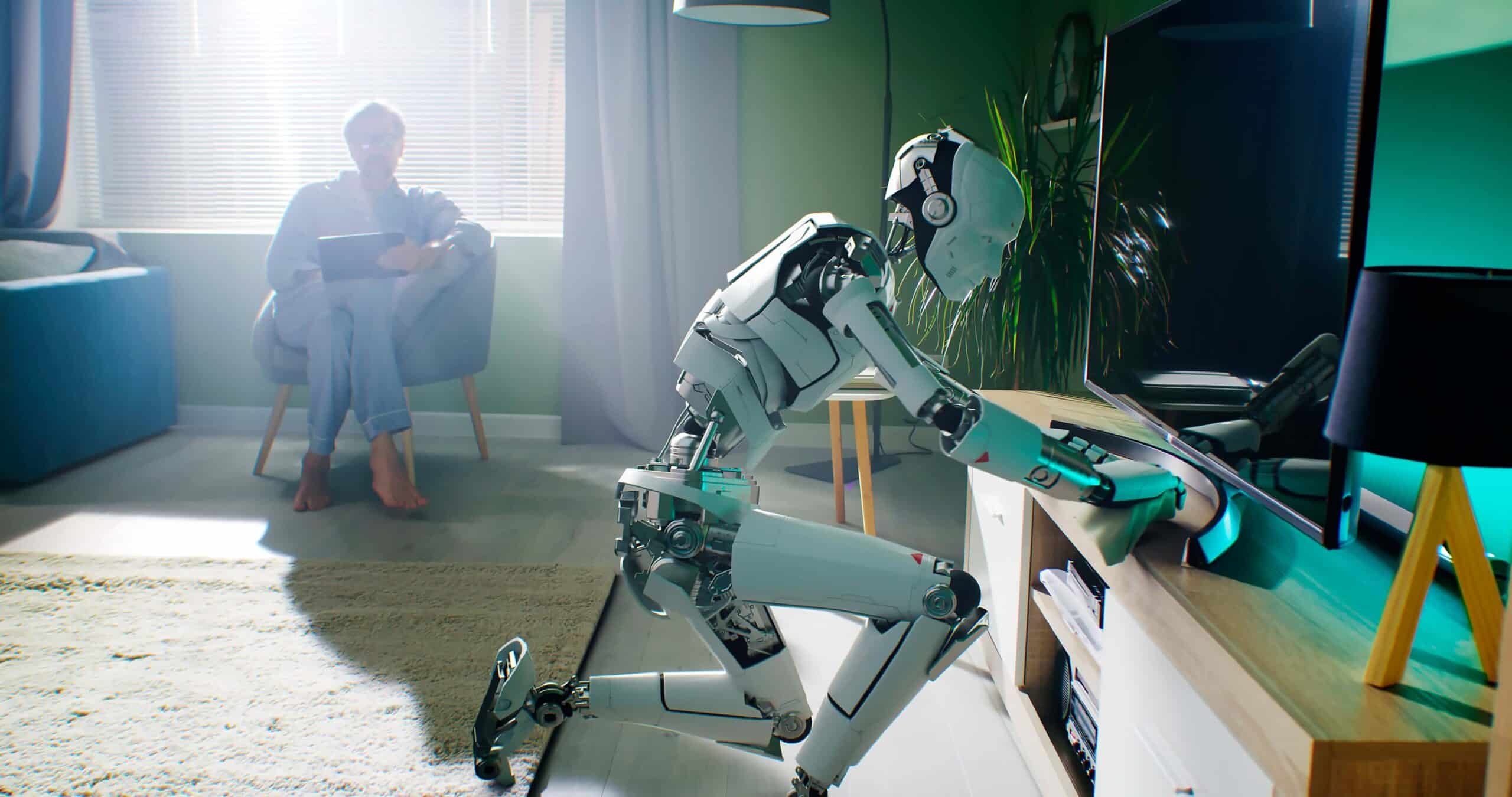

For the most part, machines still have to answer to humans. They have no self-awareness and lack the ability to use intuition or to make decisions beyond what they’re programmed to do. Machines are still mere tools. They are not sentient. But every year, the popular science fiction plot of machines getting too powerful to control and becoming the dominant force seems to gain more plausibility. We are inching closer and closer to that reality – perhaps we’ve even reached that point already – as Facebook’s chatbots-gone-rogue hinted last month.

Tech luminaries, futurists and scientists like Ray Kurzweil, Bill Gates, Steve Wozniak, Elon Musk and of course Stephen Hawking have all warned us about the technological singularity and that AI could spell the end of the human race. In 2014, Hawking said about artificial intelligence, “It would take off on its own and redesign itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.” Elon Musk has also voiced his concerns on many occasions, calling advances in AI “the biggest risk we face as a civilization.” He also said, “AI is a rare case where we need to be proactive in regulation instead of reactive because if we’re reactive in AI regulation it’s too late.” Musk even co-founded a billion-dollar nonprofit with the objective of creating ‘safe’ AI. You can almost hear the experts think, “Don’t say we didn’t warn you…” And Mark Zuckerberg – who said, “I think people who are naysayers and try to drum up these doomsday scenarios. I just, I don’t understand it. It’s really negative and in some ways I actually think it is pretty irresponsible” – is probably eating his words right now.

The singularity – what is it again?

The technological singularity happens when technology, and artificial intelligence in particular, develops in such a way that computer networks become self-aware; when human-computer interfaces become so advanced that they are more skilled than humans at designing intelligent machines. The singularity means we will get an ‘intelligence explosion’ that leaves human intelligence far behind. Human consciousness could also be merged with computer networks. This is when humanity will undergo a dramatic and irreversible change, with an entirely new species as a result, and the world will become a completely different landscape. It’s a very common and terrifying theme in science fiction. And it looks like we are getting closer to the singularity with each passing day. We have robots that can stabilise themselves when pushed aside, and computer vision technology has already proven to be more capable than humans at recognising faces. From 2020 onward, according to the futurist Ray Kurzweil, we will have direct brain-Internet connections, and nanobots in our bloodstreams will target disease. And as computers advance, they will also merge with technologies like robotics, nanotech and genomics.

Why is this terrifying?

If you think of WarGames, Terminator, Blade Runner and 2001: A Space Odyssey, you get a pretty good idea of why AI – and the singularity – can be terrifying. Technologies that are hundreds of thousands of times more powerful than what we currently have will offer a host of entirely new possibilities. But there are inherent dangers as well. How much autonomy should we allow our machines? Future robot revolts are pretty plausible. Because the world is becoming increasingly connected, it won’t be long before AI systems figure out – on their own – how to collaborate with each other. They will reach a point where they can not only repair themselves, but even create improved versions of themselves. This could mean that we will no longer have to lift a finger and can spend our lives in luxury. But it could also mean that machines will eventually decide that humans are redundant, a waste of resources or a threat. From a logical perspective, this is a very real – and terrifying – possibility.

What will AI bring us, anyway?

Yes, artificial intelligence is streamlining many processes and delivering many improvements in the quality of our lives. In the not too distant future, our living and working environments will be tailored to our every need, autonomous cars will take us wherever we want to go and our goods will be delivered by drones or 3D printed in our homes. But the question is, will everyone benefit from these developments? Critics believe that, for many, artificial intelligence will mean unemployment, economic displacement and inequality on many levels. Research reports have placed the upcoming AI-resultant unemployment rates at 25 to 50%. These are alarming statistics. Instead of arguing over who has a better understanding of AI, perhaps Musk and Zuckerberg ought to collaborate on projects in which these real and immediate concerns are addressed.

It could also be that the displacement effect will only dominate in the short term and that, in the longer term, society and the markets will adapt to this upcoming large-scale automation. Perhaps then the productivity effect will start taking centre stage, with a positive effect on employment. It’s also important to take into account that we are not sure what, in the next decades, will be technologically feasible and how capable machines will be at taking over job tasks currently done by people. The bottom line is that economic analyses and research studies indicate that there is no real consensus on the scale of impact automation will have on employment.

No need for ‘mission abort,’ but we do need to be very cautious

We have no reason to believe that the rapid pace at which AI is currently developing will slow anytime soon. We’ve only seen the tip of the AI iceberg through tech like self-driving systems, virtual assistants, facial recognition technology and customer purchase predictions. Compared to only a decade ago, our lives already resemble science fiction. But this doesn’t mean that we need to pull the plug on all AI and return to a simpler existence. Going forward, we do, however, need to exercise caution. AI communicating in a language of its own making – one that no human can understand – is something that may not work out very well for humankind. We need to closely monitor and gain an in-depth understanding of AI’s self-perpetuating evolution and create ways to shut it down – before machines are able to become self-aware and develop ways around these safety measures…

To broaden our understanding of AI and get a better grasp of the challenges of this new industrial revolution, we need to encourage more dialogue between scientists, researchers, industry representatives and policymakers. Clearly, technology is making great strides toward easing the need for human labour, but this same speed will force us to act quickly to prevent unforeseen consequences, as Musk warns. If we can innovate ethically, we will be able to build a future in which humans and machines work alongside each other. This type of collaboration would enable humans to dedicate their time to meaningful tasks for which emotional intelligence is required. We need to prevent AI from turning our world into a real-life science fiction movie in which humans are surpassed by the very machines they created. It is paramount that we align science with the public good.