- Festo’s bionic hand is made from a material that’s as soft as human skin

- Robotic artist Ai-DA can draw subjects from real life

- The AI-powered Alter 3 sings opera and conducts a human orchestra

- A robot that expresses emotions through changes on its outer surface

- Robots and love: a match made in heaven

- Robots in the future will be more like humans and less like machines

When the first robots started operating in factories a few decades ago, they were big, dangerous to humans, and limited in their capabilities. But years of intense research and development in robotics are finally starting to bear fruit, and now, there’s a new breed of robots making their way into our lives. Robots today are designed to work alongside humans in a much more sensitive manner. And as they become more sophisticated, their applications extend far beyond freeing human workers from monotonous or dangerous work. In fact, a new generation of robots is more ‘human’ than ever before.

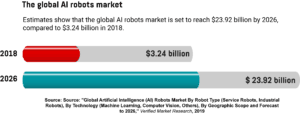

But there’s always room for scepticism, and many are worried that robot intelligence will eventually surpass ours. Some forms of robots equipped with artificial intelligence (AI) that can master complex human tasks and express feelings already exist, but that’s just the beginning. As the industry gets bigger, we can expect the development of even more innovative solutions. In fact, Verified Market Research predicts that the global AI robots market will reach $23.92 billion by 2026, compared to $3.24 billion in 2018.
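As a rough check on that forecast, the two figures imply annual growth of about 28%. This assumes steady compounding over the eight years from 2018 to 2026, which is an illustrative assumption, since the forecast as quoted gives only the two endpoints. A minimal Python sketch of the arithmetic (the function and variable names are made up for this example):

```python
# Figures quoted in the text; the 8-year span and steady compounding are assumptions.
START_VALUE_BN = 3.24   # 2018 market size, in billions of US dollars
END_VALUE_BN = 23.92    # 2026 forecast, in billions of US dollars
YEARS = 2026 - 2018


def implied_cagr(start: float, end: float, years: int) -> float:
    """Return the compound annual growth rate that takes `start` to `end`."""
    return (end / start) ** (1 / years) - 1


cagr = implied_cagr(START_VALUE_BN, END_VALUE_BN, YEARS)
print(f"Implied annual growth: {cagr:.1%}")
```

In other words, the market would have to grow by a little more than a quarter every year to hit the 2026 figure.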

Festo’s bionic hand is made from a material that’s as soft as human skin

The German automation firm Festo developed a robotic arm with a bionic hand that moves like a human one. BionicSoftHand, as it’s called, is covered with silicone skin that’s almost as soft as human skin. Unlike conventional robotic hands, it doesn’t have a stiff skeletal structure, minimising the risk of injury when it comes into contact with humans. The hand consists of “inflatable bellows covered in a skin made of elastic fibers for flexibility, making it able to mimic the movements of a human hand”. It’s also equipped with inertial, force, and touch sensors that allow it to be fast and precise when handling objects.

During testing, the robot was tasked with holding and rotating a 12-sided object until a specific corner pointed upward. It completed this task successfully thanks to AI, learning from its mistakes to arrive at the best method. Currently, the robot can only handle objects, but the team at Festo is planning to improve the technology so that it can be used in factories, car maintenance, or computer services, since “sometimes repairmen could really use an agile third hand to assist”.

Robotic artist Ai-DA can draw subjects from real life

While Festo’s solution could be helpful in assisting humans, some envision robots as more sophisticated machines, capable of producing art. In fact, these robots already exist, and one of them was developed by Aidan Meller, a gallery owner from the UK. He designed Ai-DA, a life-like robotic artist that can draw portraits. The robot was named after Ada Lovelace, the British mathematician widely credited with writing the world’s first computer program.

Ai-DA’s body was built by the UK-based robotics firm Engineered Arts. The robot’s head is covered with silicone skin, while its teeth and gums are 3D-printed. Thanks to its bionic eyes, equipped with cameras, Ai-DA can ‘see’ a person, isolate them from the background, and draw a portrait using ink or pencil. As Meller explains, it can create “very tight drawings” or “quite abstract” art. The plan for the future is to give the robot the ability to paint as well. What’s more, Ai-DA can engage with an audience, which means the robot can also be used for performances in public spaces. It will make its first public appearance at the Unsecured Futures exhibition, to be held at the University of Oxford in May 2019. Some of Ai-DA’s drawings will appear at another show in London a few months later.

“We want to push the boundaries of what AI can do,” says Meller. “We’re using her to question how we are using AI today – are we just using it for profit, where it can have a damaging impact on humans … or are we using it for good?”

The AI-powered Alter 3 sings opera and conducts a human orchestra

Besides drawing, some robots are also good at singing. In early 2019, a humanoid robot called Alter 3 was introduced to the public at the New National Theatre (NNT) in Tokyo. The robot, developed by researchers from Osaka University and the University of Tokyo, can sing opera and conduct a human orchestra. Powered by an AI neural network, the robot can autonomously move its fingers, head, arms, and upper torso, as well as make facial expressions. After its debut in Tokyo, the robot was presented at the Hi Robot – Das Mensch Maschine Festival in Düsseldorf, and from May to August 2019, it will be showcased in London as well.

The New National Theatre is also on a mission to create an opera starring Alter 3. The show is still in the making and is expected to premiere sometime in 2020. Alter 3 will perform together with a children’s choir, while performances by other opera singers, NNT’s choir, and the Tokyo Philharmonic Orchestra will also be included. Keiichiro Shibuya, who’ll be in charge of composing the music for the new opera, claims this is “something that has never been done in an opera before”.

According to Mahoro Uchida, NNT’s curator and producer of Alter 3 exhibits outside of Japan, “Ever since our first installment of Alter in 2016, it has provided surprise, profound emotion, and time for thought to our visitors while crossing the boundaries between machine and man, life and program.”

A robot that expresses emotions through changes on its outer surface

Over the past few years, there’s been a surge in robots that can copy human facial expressions, such as smiling or looking confused. But some researchers have taken it one step further.

A team of scientists from Cornell University designed a test robot that can express its ‘mood’ by changing parts of its outer surface. Beneath the robot’s ‘skin’, there’s a grid of texture units, the shape of which changes based on the robot’s ‘feelings’. The researchers wanted to move away from human-based expression, and were instead inspired by animals, many of which use non-verbal cues to convey their emotions. Currently, the texture-changing skin features only two shapes: goosebumps and spikes. Though the robot doesn’t have a specific application, the scientists are happy they were able to prove that something like this can be done, and it could be the first step toward developing more sophisticated and advanced social robots.

Robots and love: a match made in heaven

Not only will future robots be able to express their emotions, they’ll also be able to love us. At least that’s what the New York-based designer and DJ Fei Liu believes. Realising how difficult it can be for people to find that special someone, Liu decided to create her own companion. Named Gabriel2052, the robot is being built using open-source software and hardware. Liu isn’t developing Gabriel2052 just to overcome loneliness with an ideal romantic companion, but also to inspire “women to learn new technical skills that would advance the representation of female sexuality in robotics”. The existing love-robot industry is still dominated by male interests and perspectives, and Liu wants to change that.

To make her robot act more like a human, Liu is equipping it with a useful feature that will enable it to have unpredictable conversations with its human companion. To make this happen, Liu is creating an SMS-based chatbot by utilising a database of text messages exchanged between her and her ex-boyfriend. Based on those messages, the robot will be able to learn how to communicate with Liu. What Liu wants is a robot that would be able to challenge her, or even break up with her.

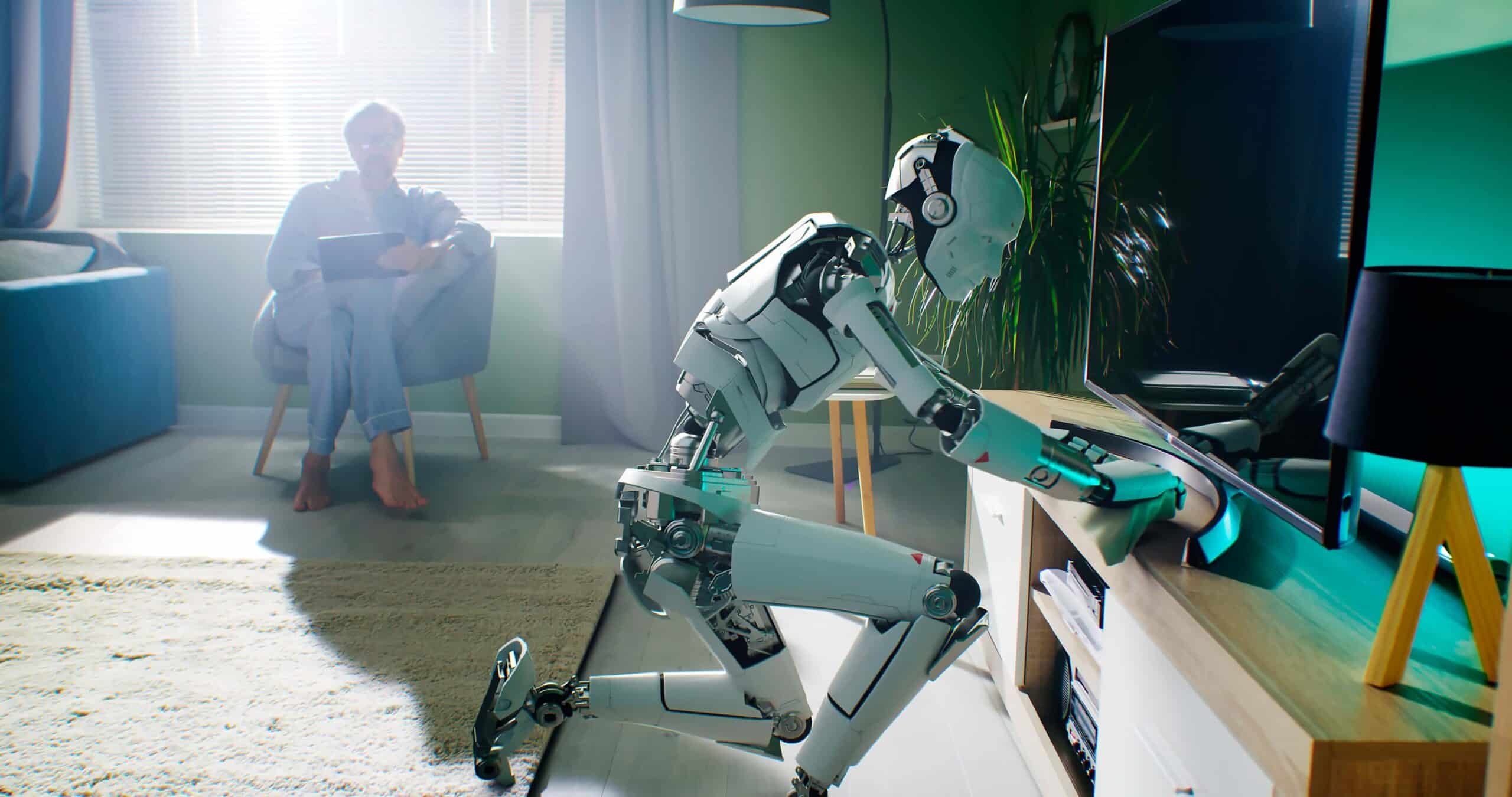

Robots in the future will be more like humans and less like machines

The adoption of robotics is expanding across multiple industries. And even though people’s attitudes towards this technology differ, advancements in robotics and machine learning continue to emerge. But what does this mean for the future? As these advances blur the boundaries between humans and machines, robots will transform not just businesses but society in general. According to Guy Kirkwood, the chief evangelist at the software company UiPath, ultimately, “every person will have their own robot”.

But such robots would be radically different from current ones. Futuristic robots designed to mimic our behaviour will learn to excel at human tasks. With further improvements, these life-like robots will be able to accurately detect our emotions and respond accordingly, or even develop their own feelings. Though designers have already created some pretty impressive robotic solutions, the tech still needs to become a lot smarter, cheaper, and easier to use, so people are more willing to embrace it.