- Pepper customises its behaviour to suit your mood and entice you to buy

- Will robot prison guards be able to do a body search?

- Kanae Chihira is almost real – much too real, according to some

- What level of autonomy are we willing to give our humanoid robots?

- Teaching robots human values to enable them to make judgements

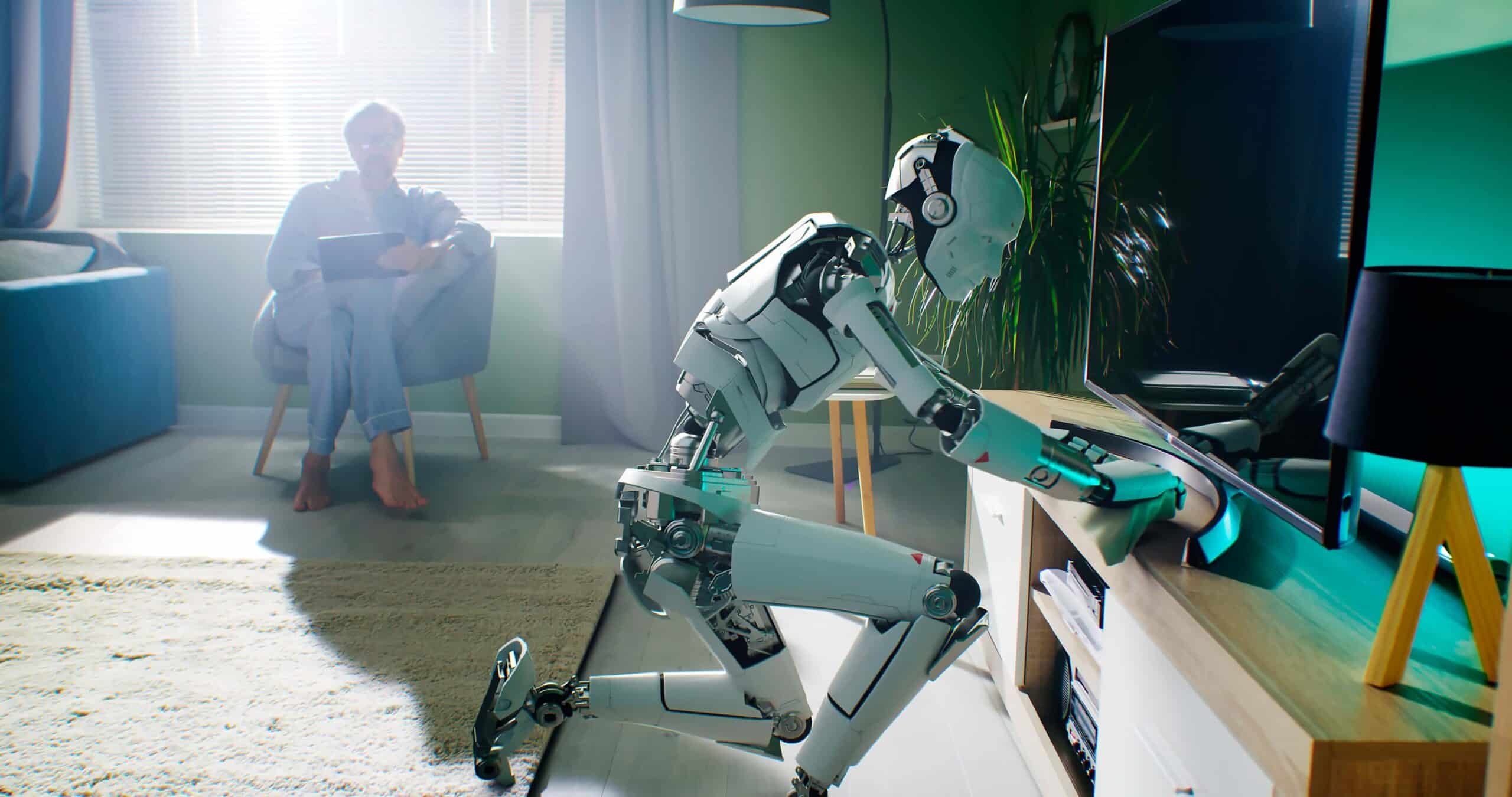

Humanoid robots sound like the stuff science fiction movies are made of. According to the European Union, however, within the next five years they will have found their way into homes, shops, hotels, care facilities and even prisons around the world. Many different humanoid robots have already been developed and are being piloted in various environments. Equipped with sensors, cameras and touch screens, they fulfil roles in hospitals and homes for the elderly. They hand out medication, serve meals, alert medical personnel in case of emergencies or simply provide companionship. Humanoid robots can read bedtime stories to your child or even fetch your child from school. They can show you around a store or guard inmates. Today’s humanoids are already advanced enough to monitor a person’s behaviour and learn, through facial expression and voice recognition technology, when the best time is to approach someone. They can encourage socialisation by reminding someone to call a friend. All their features are highly customisable, even their tone of voice. Are these new humanoid robots going to replace our human caregivers, shop assistants and prison wardens? And will we be able to tell them apart from real humans?

1. Pepper customises its behaviour to suit your mood and entice you to buy

A good example of a humanoid robot already in use is Pepper, an advanced AI-powered robot developed by robotics giants SoftBank and Aldebaran. The robot stands 1.20 m tall and can detect and analyse facial expressions, communicate with people and provide assistance. Cute and toy-like, it rolls up to you and turns its head towards you when you talk. Pepper’s head is fitted with 2D and 3D cameras, sensors and multi-directional microphones. Its hands feature touch sensors, while its mobile base is fitted with laser and sonar technology, gyroscopes and bumper sensors. The robot can be used in stores and hospitality businesses but also in schools and universities. Pepper is able to gain insights and customise its behaviour according to four different moods it can recognise: joy, happiness, anger and sadness. Over 100,000 Peppers have already been deployed in stores across Japan and Europe. They know how much time you spend in the store, which products you are interested in and what you’ve already purchased. Blinking its big eyes at you, Pepper will entice you to buy by saying things like: ‘We have a 30% discount on that laptop today.’ Impressive as this is, some people may find all this sophisticated tracking technology rather invasive and raise privacy concerns. Ultimately, however, how invasive it is depends on how the end user (the business that deploys Pepper) uses its customers’ information.

2. Will robot prison guards be able to do a body search?

A prison in Pohang, South Korea, is currently field testing the world’s first robotic prison guards, developed by the Asian Forum for Corrections, the Electronics and Telecommunications Research Institute and manufacturer SMEC. According to the Forum, the robot is the future of prison security. Equipped with 3D depth cameras and software designed to study and analyse human behaviour, it can report unusual situations to the control centre in real time. The prison robot was developed to ease the heavy workload of prison wardens and to increase the safety and security of inmates. It is designed to protect prisoners from incidents such as assault, arson and suicide by closely monitoring what happens inside a cell or communal area. Unusual occurrences and other anomalies are analysed by pattern recognition algorithms that focus on potentially problematic behaviour.

In the event of a potential problem, the robot sends out alerts so that correctional officers can rush to the scene in time to stop suspicious incidents from becoming real problems. Through the robot’s two-way wireless system, officers can also communicate with prisoners without having to leave the control room. Using navigation tags along the corridor ceilings, the robot can patrol a prison independently, although it can also be controlled manually via an iPad. The authorities are optimistic that the prison robots will lead to increased safety and lower labour costs. According to the designers, the next step in development is a robot that can perform actual body searches, although most admit that neither the prison system nor the technology is quite ready for that.

3. Kanae Chihira is almost real – much too real, according to some

Toshiba’s latest humanoid robot, Kanae Chihira, successor to Aiko Chihira and Junko Chihira, looks, talks and moves even more like a real human than her predecessors. Kanae blinks and moves her lips smoothly while speaking. She is programmed with various human-like facial expressions and can greet visitors in Japanese, Chinese, English, German or even sign language. In fact, the robot can be fitted with any kind of language processing system. Her predecessor Aiko was stationed at a Tokyo department store, where she greeted customers and provided basic store information. The current Chihira robots can only respond to visitors with pre-scripted answers, but once they gain speech recognition capabilities, they will soon be able to genuinely answer questions. Toshiba is developing more robots like Kanae, Junko and Aiko to assist tourists during the 2020 Tokyo Olympics. Other future applications for the humanoid include healthcare and care for the elderly.

4. How ‘human’ should robots be?

Surveys held in Japan and the US have indicated that there are huge differences of opinion when it comes to what humanoid robots should look like. People in Japan seem to want robots to be indistinguishable from humans, while people in the West generally prefer to know that they are dealing with a robot. According to Noel Sharkey, a roboticist at the University of Sheffield, Toshiba’s Kanae falls ‘clearly on this side of uncanny valley.’ The uncanny valley describes the phenomenon of people feeling uncomfortable or ‘creeped out’ when a robot looks too much like a human being.

Robots fulfilling care roles will most likely become more and more human-like, and not only in appearance. Robotics researchers agree that robots in this field would have to be capable of recognising and understanding speech and facial expressions, as well as displaying some kind of personality or social behaviour. But where will we draw the line? Do we really want robots to be more autonomous, take more initiative, have more personality and look exactly like humans? Do we want them to be conscious ‘beings’, capable of emotions? Some argue yes, as this would make them more aware when dealing with people: they would be able to sense dangerous situations or emergencies and act accordingly. Others argue that we shouldn’t give them too much intelligence and that we should keep control at all times.

5. What level of autonomy are we willing to give our humanoid robots?

Perhaps it’s about finding a balance. Depending on the robot’s task and the environment in which it will be deployed, it can be fitted with varying degrees of human-like features, intelligence, social skills and autonomy. Interesting research has been done into how much initiative people want a robot to display during a cleaning task. A study at the University of Hertfordshire, ‘Who is in charge? Sense of control and Robot anxiety in Human Robot Interaction’, revealed that the more controlling and anxious people were about robots, the more initiative they actually expected from the robot, and the more willing they were to delegate tasks to it. Quite a paradox.

Participants in the study could choose between the following options:

- Manually switching on the cleaning robot

- Remotely instructing the robot to switch itself on

- The cleaning robot switching itself on when it noticed cleaning work was needed

In the study, most of the more ‘robot-anxious’ participants wanted their cleaning robot to perform the task autonomously, without having to instruct it. One explanation for this paradoxical result could be that we are already quite familiar with autonomous or semi-autonomous technology, such as smartphones, smart appliances and wearables. Seen this way, smart companion robots are really little more than the next step. We will soon be able to buy them the way we buy appliances and tablets, and ultimately there will be one to suit everyone’s requirements.

6. Teaching robots human values to enable them to make judgements

Although we are becoming increasingly used to autonomous or semi-autonomous technology, we still need to ensure that AI makes decisions compatible with human values. These decisions need to be properly structured so that the horrors we see in movies don’t become reality. Bart Selman and Joseph Halpern, investigators at the Centre for Human-Compatible Artificial Intelligence at the University of California, Berkeley, are collaborating with scientists and experts in philosophy, economics and the social sciences. Their research focuses on the area of computer science called ‘decision theory’. One of the projects they’ve worked on is making Tesla Motors’ autonomous vehicles safer, which involves decision-making factors and moral dilemmas. Self-driving vehicles need to make decisions that involve taking risks, such as when to overtake a slow-moving car in front of them. One of the many challenges in robot decision-making they faced was: should your autonomous vehicle protect you, the driver, even if it means killing other road users in the process?

7. Of responsibility, taking blame and learning from mistakes

Another challenge is the matter of responsibility. As long as a robot or other machine is owned by someone and instructed by that person to perform tasks, the worst that can happen if something goes wrong is that the owner faces product or user liability. But what happens if, to imagine a sci-fi scenario, robots are fitted with, or develop, ethics and moral competence? Holding someone responsible is part of social regulation. We blame others in the hope that they are sensitive to moral criticism and will change their future behaviour. A robot will only be able to take blame if behavioural change is built into its intelligence, that is, if it is able to respond to criticism. If not, it could not be given ‘social being’ status and would be excluded from our community. But instead of building fear into their architecture as a motivator for being ‘moral beings’, we should perhaps equip robots with the will to be the most competent of moral beings.

If only we could do the same with humans.