- Context awareness takes AI assistants to the next level

- Meta’s AI-powered smart glasses could change the way you look at the world

- Could Humane’s Ai Pin make smartphones obsolete?

- This AI can predict when you’re going to die

- The risk of AI manipulation

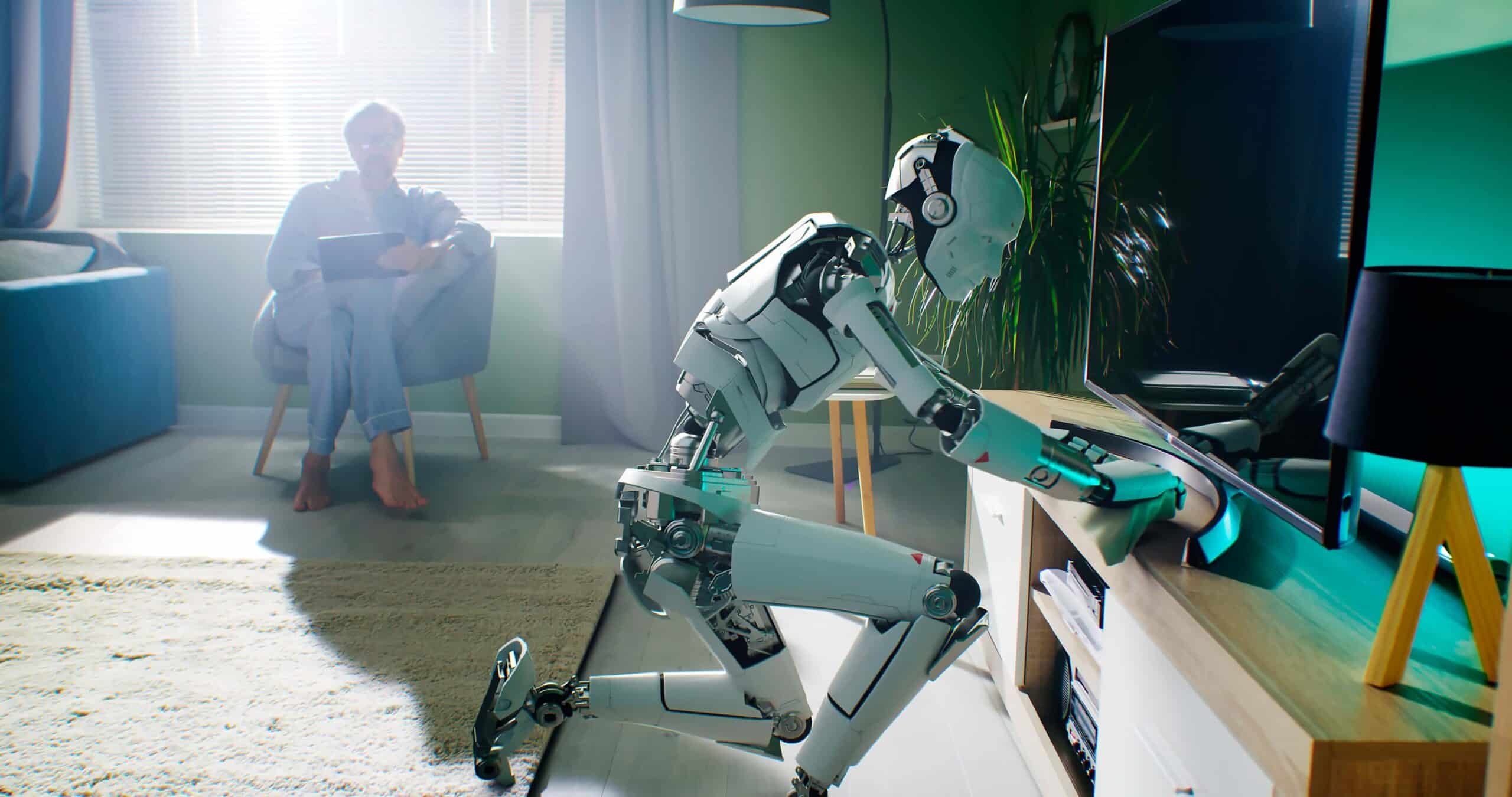

How would you feel if your every decision, interaction, and thought were subtly shaped by an ever-present AI companion? While this might sound like a futuristic fantasy, it’s actually much closer to reality than you might think. In the near future, AI assistants will become our constant companions, offering advice and insights on everything from shopping decisions to social interactions. This fusion of human and artificial intelligence, also referred to as augmented mentality, promises to be the next leap forward in our relationship with technology. Imagine an AI that doesn’t just understand your preferences but anticipates your needs, offering real-time counsel as naturally as if it were coming from an old friend.

It could remind you of your partner’s favourite cake as you pass by the bakery or provide the perfect fact to share during a work presentation. This digital friend will be there in the quiet moments, too, like when you’re choosing between a healthy meal and a guilty pleasure. For some, the prospect of such a partnership is exhilarating — a chance to enhance our mental capabilities beyond what we’ve ever imagined. For others, the idea of an AI with such intimate access to our lives is deeply disconcerting. In this article, we’ll look at the intricacies of this emerging reality, weighing the promise of a tech-enhanced life against the risks associated with over-reliance on our digital assistants. As we step into this brave new world, we’ll confront the ultimate question: is augmented mentality the key to unlocking our full potential, or does it mean we are slowly but surely succumbing to machines?

Context awareness takes AI assistants to the next level

When we think of AI assistants, what usually comes to mind are simple, voice-activated tools like Siri or Alexa. These early versions of conversational AI technology had very limited capabilities and could only perform basic tasks in response to our verbal commands. However, that may be about to change. The next generation of AI assistants will be equipped with something called ‘context awareness’, a sophisticated feature that will enable them to comprehend and respond to the user’s immediate surroundings. Sensors, microphones, and cameras embedded in the wearable devices you carry on your body will enable the AI assistants of the future to offer real-time, relevant information that matches what you see, hear, and do.

You want to know which shoes the person sitting at the table next to you is wearing, but you’re too embarrassed to ask them directly? You bumped into a person that looks familiar, but you can’t remember their name? You want to make a decent meal for your significant other, but you’re not sure what you can do with the ingredients you currently have in your fridge? Don’t worry, your AI assistant will know the answers to all of these questions — and much more besides. “By the end of 2024, using AI assistants to help draft documentation, summarise documents, or reformat data will be as natural as using a spelling or grammar checker today”, says Chris Anley, chief scientist at NCC Group.

This level of assistance, integrated so fluidly into our cognitive processes, promises to feel less like a tool and more like an innate cognitive extension — a veritable superpower, if you will. However, the very idea of a system that is always on, always watching and listening, does raise some red flags about privacy and personal freedom. So, as these devices become more integrated into our lives, it’s essential that we establish robust safeguards to protect our personal space and maintain some control over how we interact with this technology — and how it interacts with us.

Meta’s AI-powered smart glasses could change the way you look at the world

The integration of AI technology into consumer products has accelerated significantly in recent years, with a growing number of companies experimenting with embedding cameras, microphones, and motion sensors into wearable devices. These sensors allow a device to provide the wearer with context-aware assistance as they go about their everyday activities. Because they sit in the wearer’s line of sight, eyeglasses have emerged as a prime candidate for the placement of these sensors, allowing the AI to ‘see’ and ‘hear’ everything the person does. One of the companies at the forefront of this trend is Meta, which recently joined forces with Ray-Ban to develop a pair of AI-powered smart glasses that could forever change the way we interact with the world around us.

On the outside, the glasses look like an ordinary pair of Ray-Bans, sporting the timeless aesthetic the brand has become world-famous for. But this is where the similarities end, as the new glasses are also equipped with numerous smart features, including the ability to play audio and, thanks to an integrated 12-megapixel camera, to capture photos and videos and stream to social networks. And that’s not all. Probably the most intriguing feature is the integration of Meta’s AI, which enables you to ask questions and receive instant answers. For example, you could ask it to identify the building you are looking at or to tell you which ingredients were used to prepare the meal on your plate. But how does all of this work exactly?

To launch the AI, you first need to say ‘Hey Meta, take a look at this’ and then ask it what you want to know. The AI then takes a photo of whatever you’re looking at and uses generative AI to analyse the photo and identify the elements that appear in it. There are also some useful functionalities that go beyond simply identifying elements in a photo. You can, for instance, ask the AI to take a look at what you’re currently wearing and suggest items of clothing that would complete the look. The company doesn’t plan to stop there. “Right now, the glasses, from a power standpoint, you have to activate them”, explains Andrew Bosworth, Meta’s chief technology officer. “Over time, we’ll get to the point where we have sensors that are low power enough that they’re able to detect an event that triggers an awareness that triggers the AI. That’s really the dream we’re working towards”.

Could Humane’s Ai Pin make smartphones obsolete?

While Meta is focusing on eyewear, the San Francisco-based startup Humane has gone in a slightly different direction. The company has developed a wearable pin equipped with cameras and microphones, which enable it to identify objects in its surroundings. Reminiscent of Star Trek’s iconic communication badges, the Ai Pin is a small, square-shaped device that can be attached to the wearer’s shirt, collar, or some other piece of clothing. It’s powered by a new operating system called Cosmos and features a digital assistant built on OpenAI’s generative AI technology. This allows it to perform a wide range of tasks, including sending text messages, making phone calls, taking photos, playing music, and even translating conversations from one language to another in real time, which covers almost everything a smartphone does.

Does this mean that Ai Pin could one day make smartphones obsolete? Its creators certainly seem to think so. Activated by a simple tap or voice command, the device is also capable of projecting an interactive interface onto nearby surfaces, such as the palm of a hand or the surface of a table. “AI now has become something that everyone is curious about and really wants to know how it’s going to change their life”, says Humane CEO Bethany Bongiorno. “We’re offering the first opportunity to bring it with you everywhere. It’s really touching people from every background, every age group, globally, in terms of what we’re feeling and seeing in feedback”. Set to start shipping in March 2024, the device will cost $699, with an additional $24 monthly subscription that bundles a wireless plan.

Another device that could eventually replace the smartphone is the Rabbit R1. Unveiled at CES 2024, the square-shaped device is equipped with a 2.88-inch touchscreen and a rotating camera, which can be used to take photos and videos. To control the device, you just need to hold the side button and then issue a simple voice command. For example, you can ask it to play music, call a taxi, order takeout, send text messages, and place phone calls.

While this may not sound particularly impressive, it’s the way the device does these things that makes it stand out from the competition. Unlike other devices in this category, which are all powered by large language models, Rabbit R1 is based on something called a large action model (LAM). Essentially, an LAM is an algorithm that can understand and replicate a wide range of human actions in all sorts of computer applications. For example, if you ask it to play a song on Spotify, it will automatically connect to the service, search for the song you asked for, and play it through the device’s speaker. Best of all, you don’t even need to have the app installed. All you have to do is link your Spotify account through Rabbit’s online portal, and the device will take it from there. The same process can be applied to almost any other application, allowing you to control all of your apps and services through one single interface.
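The Spotify example above can be sketched as a short sequence of steps. This is a minimal illustration only: Rabbit has not published how its large action model works, so a hard-coded rule stands in for the learned model here, and every name in the sketch (`Action`, `plan`, the `"spotify"` label) is hypothetical rather than part of any real API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """One step the assistant would carry out on the user's behalf."""
    service: str        # hypothetical label for the linked account
    step: str           # what to do: "connect", "search" or "play"
    argument: str = ""  # optional detail, such as the song title


def plan(request: str) -> list[Action]:
    """Turn a spoken request into an ordered list of actions.

    A real action model would learn this mapping; the single rule
    below is a stand-in that only understands "play <song>".
    """
    prefix = "play "
    if request.lower().startswith(prefix):
        song = request[len(prefix):].strip()
        return [
            Action("spotify", "connect"),
            Action("spotify", "search", song),
            Action("spotify", "play", song),
        ]
    raise ValueError(f"No rule for request: {request!r}")


steps = plan("Play Yellow Submarine")
for action in steps:
    print(action.service, action.step, action.argument)
```

The point of the sketch is the shape of the output: one request becomes several ordered steps inside a service, which is what distinguishes an action model from a system that only generates text.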

This AI can predict when you’re going to die

Having an AI helper that can anticipate your every need and provide assistance where necessary will probably sound like a great idea to just about anyone. But what if that same AI could also predict exactly when you are going to die? Would you still think it was a good idea? A team of researchers from DTU, the University of Copenhagen, ITU, and Northeastern University have developed an AI model that can accurately predict future events in a person’s life, including their time of death, by analysing events that have taken place in their past. Named life2vec, the new AI model is based on technology similar to the one behind ChatGPT, with extensive health and labour market data from more than 6 million people used as its training data. “We used the model to address the fundamental question: to what extent can we predict events in your future based on conditions and events in your past?” explains Professor Sune Lehmann, a researcher at DTU. “Scientifically, what is exciting for us is not so much the prediction itself, but the aspects of data that enable the model to provide such precise answers”.

According to the researchers, life2vec’s responses were fairly consistent with the findings provided by social science. For example, leadership positions and elevated income levels were among the characteristics that the model identified as indicative of lower mortality risk, while having mental health issues as well as being male were linked to a heightened risk of premature death. “What’s exciting is to consider human life as a long sequence of events, similar to how a sentence in a language consists of a series of words”, adds Lehmann. “This is usually the type of task for which transformer models in AI are used, but in our experiments, we use them to analyse what we call life sequences, i.e., events that have happened in human life”. Reflecting on the intricacies of life sequences also prompts introspection. Contemplating the knowledge of our own mortality naturally leads to soul-searching: How would such awareness influence our perception of life’s journey? Would it compel us to reevaluate our priorities and the choices we make along the way?
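Lehmann’s comparison between life events and the words of a sentence can be made concrete with a short sketch. The events, the `category:value` token format, and the function names below are invented for illustration and are not taken from the life2vec research. The sketch covers only the step of turning dated events into an ordered sequence of integer ids, which is the kind of input a transformer model consumes, and it makes no prediction of any kind.

```python
from datetime import date

# Hypothetical life events as (date, category, value), deliberately unordered.
events = [
    (date(2015, 6, 1), "job", "school_head"),
    (date(2010, 9, 1), "job", "teacher"),
    (date(2012, 3, 15), "income", "band_3"),
    (date(2013, 1, 10), "health", "checkup"),
    (date(2016, 2, 20), "health", "checkup"),
]


def to_life_sequence(events):
    """Sort events by date and turn each one into a word-like token."""
    ordered = sorted(events, key=lambda event: event[0])
    return [f"{category}:{value}" for _, category, value in ordered]


def build_vocabulary(tokens):
    """Give each distinct token an integer id, in order of first appearance."""
    vocabulary = {}
    for token in tokens:
        vocabulary.setdefault(token, len(vocabulary))
    return vocabulary


tokens = to_life_sequence(events)
vocabulary = build_vocabulary(tokens)
token_ids = [vocabulary[token] for token in tokens]

print(tokens)
print(token_ids)
```

Just as a repeated word in a sentence maps to the same id, the two health checkups in this invented record share one id, so the model sees a recurring event rather than two unrelated ones.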

The risk of AI manipulation

While it’s true that context-aware AI assistants could provide a wide range of benefits, their use also raises some serious ethical concerns, the biggest of which is that the technology may be used to manipulate us. If left unchecked, they could become the voice of corporate interests, weaving targeted conversational advertising into the fabric of everyday life to influence our decisions and behaviour through subtle suggestions and nudges. Policymakers, thus far, have scarcely addressed the issue of AI manipulation, a critical oversight given the technology’s surge in popularity in recent years. “AI is being deployed across ways that impact all areas of our life, which brings significant risks if not controlled properly”, says Sian John, chief technology officer at NCC Group. “AI is a global challenge that needs a global response, and while AI offers endless opportunities, we must make sure that the adoption of AI practices is done responsibly and with cybersecurity in mind”.

Some people will probably try to escape the AI’s influence by simply refusing to embrace the technology. However, by doing so, they run the risk of putting themselves at a serious social and professional disadvantage, especially once the technology becomes more widely adopted, which seems more than likely. Others may not even be afforded this choice, as the technology could be priced out of their range. The result could be a new social order in which those who can afford AI tools have their mental and social capabilities augmented, while those who cannot are relegated to the fringes of society.

“Technology regulators often fail to protect the public by focusing on the familiar dangers rather than the new dangers. This happened with social media, where regulators looked at influence campaigns on social media [i.e. advertising] and considered the issue to be similar to traditional print, television, and radio advertising. What they failed to appreciate is that social media enables targeted influence, which was a totally new danger and contributed to the polarisation of society”, says Louis Rosenberg, the CEO of Unanimous AI. “Yes, it’s a danger that AI tools have made it far easier to create fake photos, fake audio clips, fake videos, and fake documents than ever before. But this is not a new danger. The new danger is generative AI is enabling an entirely new form of influence — interactive conversational influence — in which users will engage chatbots, voice bots, and soon photorealistic video bots, that will be able to convey misinformation and disinformation through interactive conversations that target users individually, adapting to their personal interests, values, political leanings, education level, personalities, and speaking style”.

Closing thoughts

As we edge closer to a future where artificial intelligence blends more and more into our everyday lives, we can’t help but get excited about the possibilities. We’re looking at a world where AI could know us better than we know ourselves, enhancing our decision making and even giving us a heads-up about health issues before we’re aware of them. But let’s not forget that this comes with its own set of worries. How much of our personal lives are we willing to share with AI? And who’s making sure that this powerful tool doesn’t fall into the wrong hands? What we also need to be aware of is that it’s not just about who has access to these technologies but also about who doesn’t. We must be careful that the advancements in AI don’t end up creating an even bigger gap between the haves and the have-nots. As we move forward with integrating AI into our lives, we’ve got to keep our eyes wide open. We need to make sure we’re writing the rules as we go to keep this amazing tool working for us — all of us — and not against us. It all boils down to this: Can we keep our human touch in a world where machines are designed to think and act like us? What does it mean to be human in an age where our thoughts can be predicted and our choices influenced by the very technology we rely on?