- Executive summary

- The rise of the droids

- Leading by (virtual) example

- Google DeepMind’s ‘virtual playground’

- Why is my droid watching YouTube?

- CMU’s video learning suite

- Steering the robots towards success

- Toyota teaches robots via teleoperation

- Act like a human and fold your laundry

- UC Berkeley’s ‘SpeedFolding’ robot

- Trained to tackle the future

- Learnings

Executive summary:

The growing complexity of the tasks we assign to robots means we may soon be unable to rely on robots that run on rigid rules-based programming. Instead, a shift to observational learning may be required to teach them new tasks. While such a development may have been unthinkable ten years ago, today a wide variety of organisations are taking giant steps towards making this a reality.

- Google DeepMind has deployed a virtual playground inside which it coaches AI.

- CMU is using video-based learning to show robots how to carry out tasks.

- Toyota literally takes control of the robot, a practice known as ‘teleoperation’, to walk it through its duties.

- UC Berkeley’s robot can mimic a human’s fine motor skills, enabling it to fold laundry at remarkable speed.

- Widespread roboticisation may lead to difficult questions about human purpose, for which there is no easy answer.

Robotics is advancing at a dramatic pace, and it is likely we will need to shift towards observational learning to coach droids on the tasks we expect them to carry out in society. Proceeding down this path will not be easy, but provided we temper our excitement with a sense of caution, a future where the rise of advanced robotics benefits everybody remains in sight.

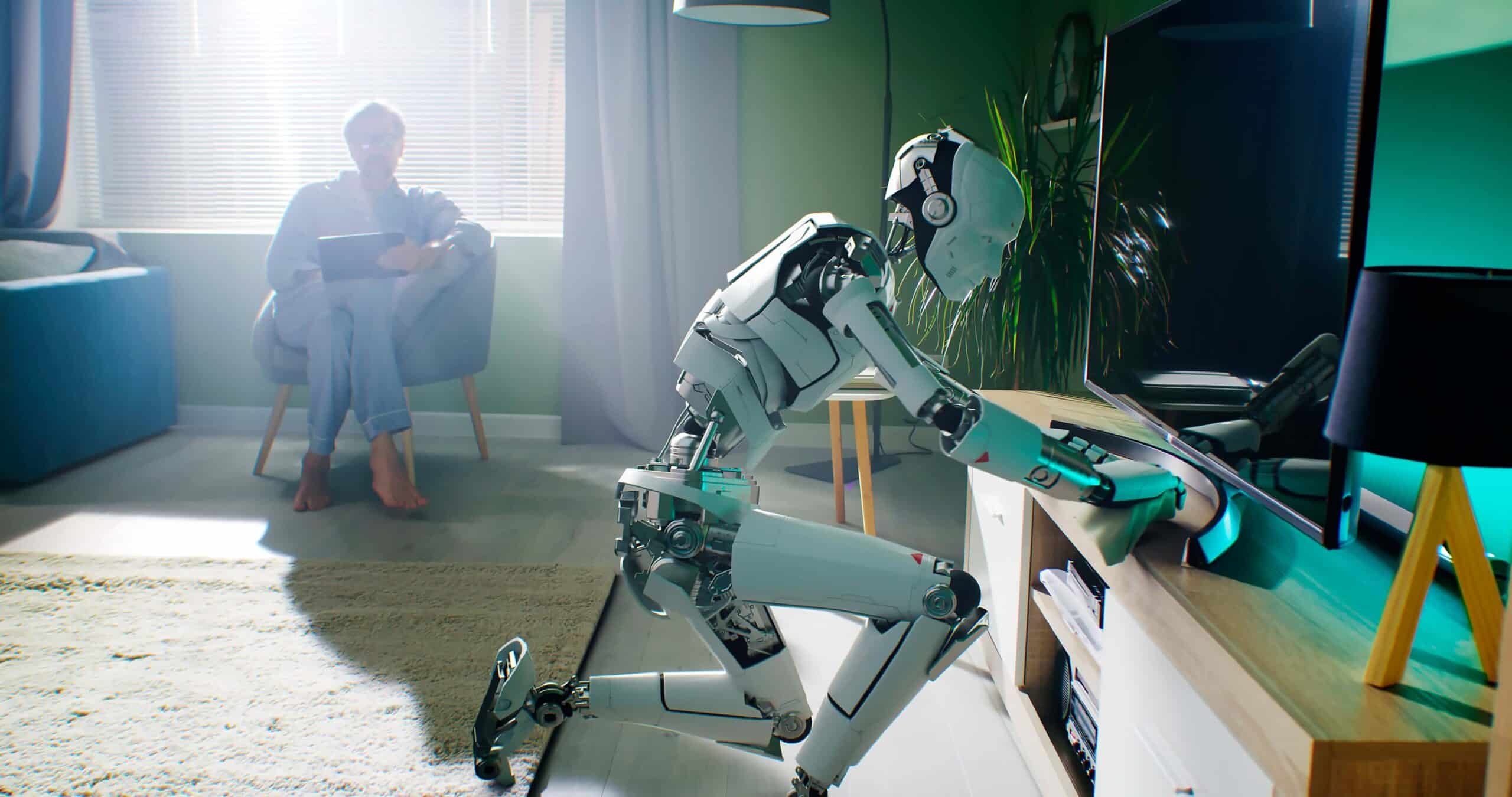

The rise of the droids

A new era could be dawning in the field of robotics, where robots learn not by code but by imitation. This leap forward is being driven by generative AI, which enables machines to observe and replicate complex human behaviours. Robots can now acquire skills in real time by analysing and imitating actions seen in the physical world or in digital media such as videos. Anything from simple gestures to complex patterns is fair game for the algorithm.

Shifting away from rules-based human instructions to observational learning opens up a myriad of possibilities. Ten years from now, there could be robots in healthcare learning directly from surgeons to assist in operations, or in art studios teaching students the basics of brushstrokes. Whatever industry you look at, the potential applications seem almost limitless.

At the heart of this revolution is reinforcement learning, a process by which robots refine their abilities through trial and error. It’s really not so different from what humans do when learning a new skill. By blending generative AI with this approach, however, we could see robots that learn more quickly than humans and reach a significantly higher skill ceiling. In this article, we’ll venture to the bleeding edge of this technology and explore how it is being deployed today. We’ll also peer into the future and consider how autonomous learning might hold the answers to some of tomorrow’s biggest challenges.
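Reinforcement learning covers far more sophisticated methods than this, but its core trial-and-error loop fits in a few lines. The example below is a minimal sketch that assumes a simplified "bandit" setting. A robot repeatedly picks one of three grasp strategies, checks whether the grasp worked, and updates its estimates. Most of the time it exploits the best-known strategy, and occasionally it explores the others. The strategy names and success rates are hypothetical.

```python
import random

# Hypothetical success rates for three grasp strategies. The robot does not
# know these numbers and has to discover the best strategy by trial and error.
TRUE_SUCCESS = {"pinch": 0.2, "scoop": 0.5, "wrap": 0.8}


def learn_by_trial_and_error(trials=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy learning: mostly exploit the best-known action, sometimes explore."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in TRUE_SUCCESS}  # running estimate of each success rate
    count = {a: 0 for a in TRUE_SUCCESS}    # how often each strategy was tried
    for _ in range(trials):
        if rng.random() < epsilon:
            action = rng.choice(list(TRUE_SUCCESS))  # explore: try something at random
        else:
            action = max(value, key=value.get)       # exploit: use the best so far
        reward = 1.0 if rng.random() < TRUE_SUCCESS[action] else 0.0  # did the grasp work?
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]  # incremental mean
    return value, count


value, count = learn_by_trial_and_error()
for action in TRUE_SUCCESS:
    print(f"{action}: tried {count[action]} times, estimated success {value[action]:.2f}")
```

The `epsilon` parameter sets the balance between exploring and exploiting. Real robot learning replaces three fixed options with continuous motor commands and a neural network, and that is where much of the difficulty lies.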

Leading by (virtual) example

What’s the safest way to teach a robot how to carry out its duties? Probably in a simulated environment.

What does it take for a robot to successfully mimic human behaviour? Well, it all starts with data—lots of it. Typically, robots are first fed vast amounts of information about human actions and reactions across a wide range of contexts. This data is then processed using deep learning algorithms to extract relevant patterns and features. Through repeated training, the robot’s neural network can gradually refine its understanding of human behaviour, enabling it to produce increasingly accurate and natural-looking responses.
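The "learn from data, then refine through repeated training" loop described above is often called behavioural cloning. Each demonstration is treated as a labelled example, and a policy is fitted to map observations to actions. In the minimal sketch below, a two-parameter linear policy stands in for a deep neural network. The demonstrations are invented for illustration: they follow a hypothetical rule in which a person's hand speed depends on their distance from an object, plus a little noise.

```python
import random


def make_demonstrations(n=200, seed=0):
    """Hypothetical demos: hand speed is roughly 2 * distance - 1, plus noise."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        distance = rng.uniform(0.0, 1.0)                      # observation
        speed = 2.0 * distance - 1.0 + rng.gauss(0.0, 0.05)   # demonstrated action
        demos.append((distance, speed))
    return demos


def mse(w, b, demos):
    """Mean squared error between the policy's actions and the demonstrations."""
    return sum((w * obs + b - act) ** 2 for obs, act in demos) / len(demos)


def train_policy(demos, epochs=200, lr=0.5):
    """Behavioural cloning: fit action = w * obs + b by gradient descent on MSE."""
    w, b = 0.0, 0.0
    n = len(demos)
    for _ in range(epochs):  # each pass nudges the policy closer to the demos
        grad_w = sum(2 * (w * obs + b - act) * obs for obs, act in demos) / n
        grad_b = sum(2 * (w * obs + b - act) for obs, act in demos) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


demos = make_demonstrations()
w, b = train_policy(demos)
print(f"learned policy: speed = {w:.2f} * distance + {b:.2f}")
print(f"error before training: {mse(0.0, 0.0, demos):.3f}, after: {mse(w, b, demos):.4f}")
```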

However, true mimicry requires more than just simple pattern recognition. Robots must also be able to adapt and improvise when faced with situations that haven’t been specifically included in their programming. In other words, they need to exhibit the same kind of flexibility and creativity that comes naturally to us humans. ‘Is this even possible?’, you might ask. While we may not be there just yet, we are getting closer to realising this goal.

Google DeepMind’s ‘virtual playground’

Google DeepMind is one of the most noteworthy players in this field, setting a precedent for novel human mimicry via its GoalCycle3D digital playground. In this virtual environment, an AI can hone its craft through repeated trial and error. Whereas conventional AIs typically need extensive training on countless examples to perform even a simple task, DeepMind’s latest creation can pick up new skills in real time, merely by observing human demonstrators. It’s a technique reminiscent of one of our most profound abilities: the power to learn quickly from one another, a skill that has been instrumental in human progress.

In GoalCycle3D, AI agents must navigate an ever-changing landscape filled with obstacles. Rather than learning by rote repetition, they watch an expert (either a hard-coded bot or a human-controlled one) swiftly navigate the course, grasping the nuances of the task through observation alone. After training, the agents prove capable of emulating the expert’s actions independently, which suggests they are not merely memorising sequences but actually understanding the task. To make this possible, the researchers equipped the agents with a predictive focus and a memory module.
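DeepMind’s agents are neural networks trained with reinforcement learning, not hand-written rules, but the behaviour the researchers were looking for can be illustrated with a deliberately simple toy. In the sketch below, a learner watches an expert visit coloured goals in a hidden cyclic order, keeps the recent visits in a small memory, and then continues the cycle on its own. The function names, goal colours, and memory size are hypothetical. The scoring rule (one point per goal visited in the correct order) is a simplified assumption loosely modelled on GoalCycle3D’s reward.

```python
def observe_expert(expert_visits, memory_size=8):
    """Keep only the expert's most recent goal visits (a bounded memory)."""
    return list(expert_visits)[-memory_size:]


def infer_cycle(memory):
    """Recover the goal order by reading remembered visits until one repeats."""
    cycle = []
    for goal in memory:
        if goal in cycle:
            break
        cycle.append(goal)
    return cycle


def act_alone(memory, steps):
    """Once the expert has gone, keep visiting goals in the remembered order."""
    cycle = infer_cycle(memory)
    start = (cycle.index(memory[-1]) + 1) % len(cycle)  # goal after the last one seen
    return [cycle[(start + i) % len(cycle)] for i in range(steps)]


def score(visits, true_cycle):
    """+1 for every move to the goal that correctly follows the previous one."""
    n = len(true_cycle)
    return sum(
        1
        for prev, nxt in zip(visits, visits[1:])
        if true_cycle[(true_cycle.index(prev) + 1) % n] == nxt
    )


# The correct order is hidden from the learner; only the expert's visits are seen.
true_cycle = ["green", "yellow", "red", "blue"]
expert_visits = [true_cycle[i % len(true_cycle)] for i in range(10)]

memory = observe_expert(expert_visits)
solo_visits = act_alone(memory, steps=8)
print("inferred order:", infer_cycle(memory))
print("points scored alone:", score([memory[-1]] + solo_visits, true_cycle))
```

The toy separates remembering what was observed from turning it into a reusable plan. In DeepMind’s agent, this behaviour emerges from training rather than being written by hand.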

While these are promising developments, translating this technology from virtual environments to real-life situations isn’t straightforward. During the trials, the AI was tested with a single, consistent human guide, which raises the question of whether it can adapt to the unpredictable variety of human instruction. Moreover, however dynamic and randomised GoalCycle3D may be, it cannot match the messiness of the real world. And the tasks themselves were relatively straightforward, demanding none of the fine motor skills that many real-world tasks require.

Nevertheless, the progress in AI social learning is encouraging. DeepMind’s AI, with its novel learning capabilities, offers a tantalising glimpse of a future where machines learn directly from people, without input from a team of coders. It’s a balancing act between the formal rigours of AI development and the informal, adaptable learning that characterises human intelligence, and DeepMind is walking this tightrope with promising agility.

“The robot can learn where and how humans interact with different objects through watching videos. From this knowledge, we can train a model that enables two robots to complete similar tasks in varied environments”.

Deepak Pathak, assistant professor at CMU

Why is my droid watching YouTube?

Video tuition can prove a surprisingly effective method of teaching robots how to do things.

Let’s face it: while there are a million game-changing applications for robots out there, most of us just want something that can help around the house. Imagine having a droid that can navigate your home, do the dishes, make the bed, and even cook dinner. It’s the ultimate dream for anyone who’s ever felt overwhelmed by the endless list of chores that come with running a household.

Creating a robot capable of navigating the unpredictable environment of our homes is no easy task, and raw compute power alone won’t cut it. Equipping the robot with sensors sophisticated enough to perceive its surroundings and avoid obstacles in its path is relatively simple, albeit potentially very expensive. The harder part is teaching it how to actually perform each chore.

Humans have it easy these days. With the proliferation of online video content, there is a step-by-step tutorial for virtually any task we can think of, no matter how niche or obscure. But what if there were a way to harness this video content for robot training?

CMU’s video learning suite

As it turns out, a team of researchers at Carnegie Mellon University (CMU) is working on precisely this. They’re teaching robots to carry out all manner of household chores, not through in-person demonstrations but through video. This is a significant leap from conventional methods that require laborious rules-based coding ahead of time. Leveraging large datasets such as Ego4D, with thousands of hours of first-person footage, and Epic Kitchens, which captures kitchen activities, the CMU team trains its robots to learn from a diverse range of human behaviours.

These datasets, originally intended for training computer vision models, are proving instrumental in teaching robots to learn from internet and YouTube videos. “The robot can learn where and how humans interact with different objects through watching videos. From this knowledge, we can train a model that enables two robots to complete similar tasks in varied environments”, explains Deepak Pathak, assistant professor at CMU. Pathak’s team has moved beyond its earlier work, known as In-the-Wild Human Imitating Robot Learning (WHIRL), which required humans to demonstrate tasks in the robot’s presence. Their new model, the Vision-Robotics Bridge (VRB), streamlines this process, removing the need for direct human guidance and for identical environments.

Now, a robot can pick up a new task in as little as 25 minutes of practice. Shikhar Bahl, a PhD student in robotics, shares the team’s excitement: “We were able to take robots around campus and do all sorts of tasks. Robots can use this model to curiously explore the world around them”. This curiosity is guided by an understanding of ‘affordances’, a term borrowed from psychology for the potential actions an environment offers an individual. By identifying where a human makes contact with an object and how they then move it (for instance, when opening a drawer), a robot can work out how to perform the same action on other drawers.
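VRB uses a neural network trained on large video datasets to predict contact points and post-contact motion from images. The sketch below is a far simpler stand-in that captures the idea of an affordance. It counts where hands touch an object across a few video clips, keeps the most common contact region, and averages how the hand moved afterwards. It then turns the result into gripper waypoints. The clip data, grid size, and function names are all hypothetical.

```python
from collections import Counter

# Hypothetical hand detections from videos of people opening drawers:
# (contact point, hand motion after contact), in normalised image coordinates.
HUMAN_CLIPS = [
    ((0.52, 0.31), (0.00, 0.20)),
    ((0.55, 0.34), (0.02, 0.18)),
    ((0.58, 0.36), (-0.01, 0.22)),
    ((0.12, 0.81), (0.15, 0.00)),  # an outlier, e.g. a hand resting on the side
]


def to_cell(point, cell=0.1):
    """Snap a point to a coarse grid cell so nearby contacts are grouped."""
    return (int(point[0] / cell), int(point[1] / cell))


def learn_affordance(clips):
    """Return the most common contact region and the average motion seen there."""
    counts = Counter(to_cell(contact) for contact, _ in clips)
    best_cell, _ = counts.most_common(1)[0]
    hits = [(c, m) for c, m in clips if to_cell(c) == best_cell]
    contact = tuple(sum(c[i] for c, _ in hits) / len(hits) for i in range(2))
    motion = tuple(sum(m[i] for _, m in hits) / len(hits) for i in range(2))
    return contact, motion


def plan_waypoints(contact, motion, steps=3):
    """Reach the contact point, then follow the learned motion in small steps."""
    return [contact] + [
        (contact[0] + motion[0] * k / steps, contact[1] + motion[1] * k / steps)
        for k in range(1, steps + 1)
    ]


contact, motion = learn_affordance(HUMAN_CLIPS)
for x, y in plan_waypoints(contact, motion):
    print(f"move gripper to ({x:.2f}, {y:.2f})")
```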

While work continues apace in the field of video learning, it’s important to remember that this work isn’t simply about teaching a robot how to do the dishes. Rather, it’s about unlocking robots’ potential to learn autonomously from the wealth of online video content. Just make sure the explicit content filter is left on.

Steering the robots towards success

Put yourself in the robot’s shoes—literally—and guide it through the proper way to carry out a task.

Have you ever tried to master a new skill and found yourself struggling, only for an authority figure to swoop in and guide your hands? It’s one of the most effective ways of easing somebody’s anxieties about the learning process, and it’s also brilliantly useful for practical demonstration. Would you feel the same way, however, if the authority figure took control of your entire body to show you what to do?

Well, it might horrify you, but this is exactly how the robotics researchers in our next example are teaching robots to carry out their duties. This hands-on approach allows for a more intuitive and efficient transfer of knowledge, enabling robots to master skills that might otherwise be difficult to learn through traditional programming methods.

“This new teaching technique is both very efficient and produces very high performing behaviours, enabling robots to much more effectively amplify people in many ways”.

Gill Pratt, chief executive officer of TRI

Toyota teaches robots via teleoperation

The Toyota Research Institute (TRI) has developed a technique called Diffusion Policy, which allows robots to rapidly learn complex tasks such as flipping pancakes and peeling vegetables. Here’s how it works: a human teleoperates the robot, providing it with a variety of demonstrations to learn from. The robot then learns from these demonstrations and applies its new skills autonomously, without further human intervention.

The Diffusion Policy technique has already enabled robots at TRI to master more than 60 different skills, from manipulating deformable materials to pouring liquids. And it does so without any new code, simply by feeding robots fresh demonstration data. The approach draws on the same diffusion processes used in cutting-edge image generation, bringing benefits such as the ability to handle multi-modal demonstrations (where the same task is demonstrated in several valid ways), the capacity to plan high-dimensional actions over time, and stable training without the need for constant hand-tuning. Russ Tedrake, TRI’s vice president of robotics, noted that their robots are now performing tasks that are “simply amazing” and were out of reach only a year ago.
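To see why borrowing from image generation helps with multi-modal demonstrations, imagine an operator who sometimes steers a robot left around an obstacle and sometimes right. A policy trained to regress the average action would drive straight into the obstacle. A diffusion-style policy instead starts from random noise and iteratively denoises it into one of the demonstrated behaviours. The sketch below is a toy, one-dimensional illustration under stated assumptions. The demonstrations are invented, and the "denoiser" is a closed-form score for a Gaussian mixture rather than a trained network. It also uses annealed Langevin sampling, a close relative of the DDPM-style denoising that Diffusion Policy builds on, not TRI's implementation.

```python
import math
import random

# Hypothetical teleoperated demos of steering around an obstacle: the operator
# went left (-1.0) half the time and right (+1.0) the other half.
MODES = (-1.0, 1.0)
DATA_STD = 0.1  # assumed spread of the demonstrations around each choice


def mse_policy(demos):
    """Regression-style cloning: the single MSE-optimal action is the mean."""
    return sum(demos) / len(demos)


def score(a, sigma):
    """Gradient of the log-density of the demos after blurring with noise sigma.

    The demos are modelled as an equal-weight Gaussian mixture so the score has
    a closed form; Diffusion Policy instead trains a network to predict noise.
    """
    var = DATA_STD ** 2 + sigma ** 2
    logs = [-((a - m) ** 2) / (2 * var) for m in MODES]
    top = max(logs)  # subtract the max before exponentiating, for stability
    weights = [math.exp(lp - top) for lp in logs]
    mean = sum(w * m for w, m in zip(weights, MODES)) / sum(weights)
    return (mean - a) / var


def sample_action(rng, levels=10, steps_per_level=100, eps=0.1):
    """Annealed Langevin sampling: start from noise, denoise level by level."""
    sigmas = [0.01 ** (i / (levels - 1)) for i in range(levels)]  # 1.0 down to 0.01
    a = rng.gauss(0.0, math.sqrt(DATA_STD ** 2 + sigmas[0] ** 2))
    for sigma in sigmas:
        step = eps * (DATA_STD ** 2 + sigma ** 2)  # smaller steps at lower noise
        for _ in range(steps_per_level):
            a += 0.5 * step * score(a, sigma) + math.sqrt(step) * rng.gauss(0.0, 1.0)
    return a


demos = [-1.0] * 50 + [1.0] * 50
rng = random.Random(0)
samples = [sample_action(rng) for _ in range(200)]
print("regression policy picks:", mse_policy(demos))  # the average: straight ahead
print("diffusion-style samples going left:", sum(s < 0 for s in samples))
print("diffusion-style samples going right:", sum(s > 0 for s in samples))
```

The regression policy blends the two demonstrated behaviours into one that no operator chose, whereas the sampler commits to one of them each time.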

That said, Diffusion Policy remains a work in progress, and the robots sometimes falter when applying their skills outside a controlled environment. Acknowledging these challenges, TRI has broadened its vision to include a ‘large behaviour model’, intended to do for robot behaviour what large language models have done for understanding and generating written content. With the ambitious aim of teaching robots a thousand skills by 2024, TRI is forging a path towards a future where robots with a rich repertoire of behaviours work seamlessly alongside humans.

Act like a human and fold your laundry

Teach a robot to fold laundry, and you’ll never have to do it yourself again.

By now, you may have noticed a recurring theme: everybody wants robots to do their chores. Sometimes it can seem as though the ultimate goal of every roboticist on Earth is simply never to fold their own laundry again. If you were hoping this next example would break the pattern, we’re sorry to disappoint.

However, there’s more to it than just laziness or a desire for more convenience. Having a robot that could do your chores for you would significantly improve the quality of life for millions of people around the world who are struggling with physical limitations or disabilities. Isn’t that something worth striving for? The trouble is, folding your laundry requires a surprisingly high level of dexterity, and that’s what researchers are currently taking aim at.

UC Berkeley’s ‘SpeedFolding’ robot

At UC Berkeley, a revolution is unfolding in the laundry room. The university’s Autolab has unveiled ‘SpeedFolding’, a breakthrough methodology that trains robots to fold laundry. In a world where washing and drying machines have long taken the drudgery out of laundry day, the final frontier of folding has remained stubbornly manual. SpeedFolding aims to consign this chore to the annals of history, boasting a record-breaking rate of 30-40 garments folded per hour, an impressive increase on the previous benchmark of a mere 3-6 garments per hour.

Thing is, folding laundry is not as straightforward as it seems. The variety of clothing shapes and materials, from the thin and slippery to those prone to static cling, makes it an ideal test case for training robots on complex tasks. UC Berkeley’s approach tackles these hurdles with a system that mimics the human process of folding: drawing on over 4,300 example actions, it learns to predict the shape of a garment from a crumpled heap, smooth it out, and then fold it along precise lines.

Central to this innovation is the BiManual Manipulation Network (BiMaMa), a neural network that guides two robotic arms in a harmonious dance of complex movements. The dual-arm system mimics the coordination found in human limbs, allowing for the efficient smoothing and folding of garments into organised stacks. The success rate? An impressive 93%, with most pieces neatly folded in less than two minutes. While this system wasn’t benchmarked against the average teenager’s folding prowess, its efficiency and accuracy are undoubtedly superior. As UC Berkeley continues to refine this methodology, the day when robots seamlessly integrate into our homes to assist with everyday chores grows ever closer. Roboticists be praised.
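BiMaMa’s internals are beyond the scope of this article, but any two-armed folding system has to choose a pair of grasp points that work well together without the arms getting in each other’s way. The sketch below assumes a network has already scored some candidate grasp points and simply picks the best-scoring pair that keeps the grippers a safe distance apart. The coordinates, scores, and 20 cm minimum gap are all hypothetical, and this is not SpeedFolding's actual selection method.

```python
import math
from itertools import combinations

# Hypothetical per-point grasp scores that a network might predict for a garment:
# ((x, y) position on the table in metres, score between 0 and 1).
CANDIDATES = [
    ((0.10, 0.40), 0.90),
    ((0.12, 0.42), 0.88),  # almost on top of the first point
    ((0.55, 0.41), 0.80),
    ((0.30, 0.10), 0.40),
]


def best_grasp_pair(candidates, min_gap=0.2):
    """Pick left/right grasp points with the highest combined score,
    provided the two grippers stay at least `min_gap` metres apart."""
    best, best_score = None, -math.inf
    for (p, s1), (q, s2) in combinations(candidates, 2):
        if math.dist(p, q) < min_gap:
            continue  # too close: the arms would collide
        if s1 + s2 > best_score:
            best, best_score = (p, q), s1 + s2
    if best is None:
        return None  # no pair satisfies the gap constraint
    left, right = sorted(best)  # the left arm takes the point with smaller x
    return left, right, best_score


print(best_grasp_pair(CANDIDATES))
```

A brute-force search over pairs is fine for a handful of candidates. With dense per-pixel predictions, a real system would need something more efficient.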

Trained to tackle the future

Robots can assist humans in countless ways. But could they one day replace us?

By now, there should be little doubt that robots are set to transform our home lives. The thing is, folding laundry is a trifling matter compared with some of the bigger challenges these AI-driven robots could help us tackle. In a world afflicted by global warming, robots could take the place of human emergency responders, saving lives in situations that might otherwise prove lethal. They could even be deployed in space alongside astronauts to assist with difficult collaborative tasks, or to carry out repairs that would otherwise put human lives at risk. Boston Consulting Group projected in 2021 that the advanced robotics market could skyrocket in value, from a baseline of US$26bn to as much as US$250bn by the end of the decade.
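As a quick sanity check on the BCG projection, we can work out the compound annual growth rate implied by going from US$26bn to US$250bn. The text does not state which year the baseline refers to, so the sketch assumes 2021 to 2030, i.e. nine years of growth. A different baseline year would change the result.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: baseline year 2021, end of decade 2030 (nine years of growth).
growth_multiple = 250 / 26
rate = implied_cagr(26, 250, 2030 - 2021)
print(f"{growth_multiple:.1f}x overall, about {rate:.1%} a year")
```

Under that assumption, the projection implies a market growing by a little under 29% every year for nine years running.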

It is, however, essential to bear in mind that while these robots may mimic us effectively, they can never truly replace us. Nor can their impact on work be ignored: McKinsey has estimated that around half of today’s work activities could be automated by the middle of the century. People need purpose, and not everybody who is displaced by the rise of robots will be comfortable transitioning into life as a carefree artist. What this means for employment in sectors that traditionally rely on human labour remains an open question. How do we ensure that ethical considerations, especially around privacy and autonomy, keep pace with robotics? This question is not rhetorical but an essential checkpoint on our journey forward.

Learnings:

Robotics is advancing at an ever-increasing pace, and it seems as though our kitchens and laundry rooms will be affected first. The implications of these developments extend far beyond mere convenience; they invite us to reconsider our relationship with machines and, perhaps more importantly, our conceptions of ourselves.

- No standardised method for observational learning in robots has yet been established.

- Three of the most promising approaches are virtual environment learning, video tutorials, and teleoperation.

- Robots are increasingly capable of tasks requiring fine motor skills, which were once extremely difficult to accomplish.

- BCG predicts that the advanced robotics market could grow roughly tenfold by 2030, reaching up to US$250bn in value.

- Around half of today’s work activities could be automated by mid-century, necessitating careful consideration of how we integrate robots into society.

It goes without saying that the potential benefits are tantalising. Having said that, it’s not just about what machines can do for us but also about what this means for the future of human potential. To ensure an equitable future for all, we must think carefully about the kind of world we want to create with these advanced robots.